Understanding the Risks of Uncontrolled Indexing

Allowing Google to index a website without any controls can pose real risks to website owners. The primary concern is the exposure of sensitive information, such as personal data, financial information, or confidential business details. Googlebot can crawl any publicly accessible page it discovers, and once a page is indexed, its content can surface in search results for anyone to find. This can lead to serious consequences, including identity theft, financial loss, and reputational damage.

Another risk associated with uncontrolled indexing is unwanted attention. Pages that appear in search results are easier for spammers and attackers to find, for example by searching for exposed comment forms, admin pages, or software with known vulnerabilities. This can result in a surge of spam comments, fake user accounts, and other malicious activities that can compromise the website’s security and performance.

Uncontrolled crawling can also affect website performance. It is the crawling, rather than the indexing itself, that generates load: when Googlebot requests a large number of pages, it can strain the website’s servers, leading to slower loading times, increased bandwidth usage, and higher hosting costs. If the slowdown reaches human visitors, it can degrade the user experience, leading to higher bounce rates, lower engagement, and reduced conversion rates.

Furthermore, uncontrolled indexing can also lead to duplicate content issues, where multiple versions of the same content are indexed by Google, leading to confusion and dilution of link equity. This can negatively impact the website’s search engine rankings, making it harder to attract organic traffic and generate leads.

Given these risks, it is essential for website owners to take control of their website’s indexing and ensure that only authorized content is made available to Google. By understanding the risks of uncontrolled indexing, website owners can take proactive steps to protect their website, their users, and their business.

Why Google Indexes Websites and How to Opt Out

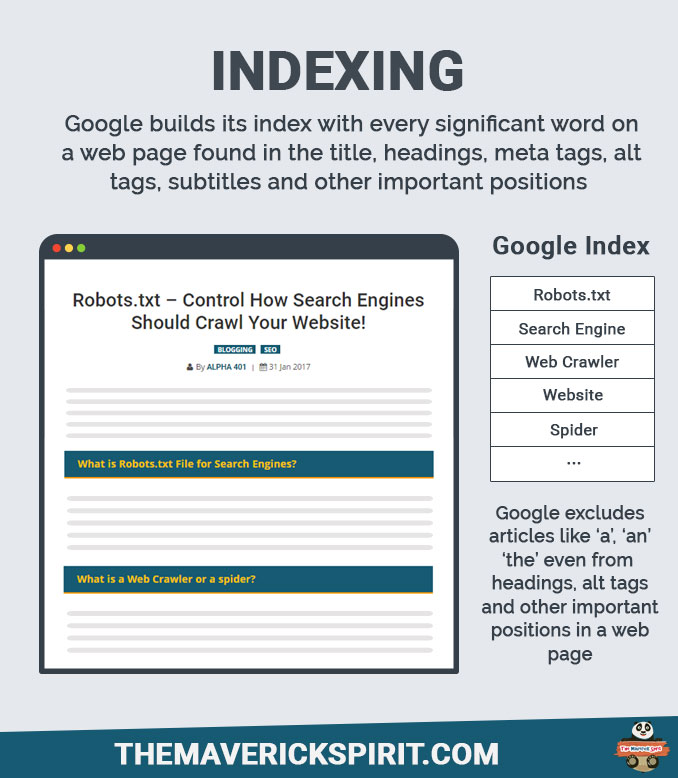

Google indexes websites to provide users with relevant and accurate search results. The search engine uses a complex algorithm to crawl, index, and rank websites based on their content, relevance, and authority. When a website is indexed, Google stores a copy of its pages in its massive database, making it possible for users to find the website through search queries.

However, not all website owners want their website to be indexed by Google. Some may have sensitive information, confidential data, or proprietary content that they do not want to be publicly accessible. Others may want to prevent Google from indexing certain pages or directories on their website. Fortunately, there are ways to opt out of Google indexing.

One way to opt out of Google indexing is to use the “noindex” meta tag, which tells Google not to index a specific page. Website owners add this tag to the head section of each page they want excluded. Another tool is the “robots.txt” file, which instructs Googlebot not to crawl specific pages or directories. The two work differently: robots.txt controls crawling rather than indexing, so a blocked URL can still appear in search results if other sites link to it, and Googlebot must be able to crawl a page in order to see its “noindex” tag.

It’s essential to note that opting out of Google indexing can have implications for website owners. For example, if a website is not indexed, it will not appear in search results, which reduces its visibility and traffic. Additionally, a “noindex” tag does not stop Google from crawling a page: Googlebot still has to fetch the page to see the tag, and it will continue to revisit the page from time to time.

Website owners who want to keep their website out of Google’s index should carefully consider the implications of doing so. They should also ensure that they are using the correct methods to opt out, as incorrect use of meta tags or robots.txt files can lead to unintended consequences.

By understanding why Google indexes websites and how to opt out, website owners can take control of their website’s visibility and keep their content out of Google’s search results if they so choose. Keeping content out of search results does not make it private, however: anything that must stay confidential also needs access controls, such as password protection.

Using Robots.txt to Control Indexing

The robots.txt file is a key tool for controlling how search engines crawl a website. This file, located in the root directory of a website, tells search engine crawlers, such as Googlebot, which pages or directories they may crawl. By using the robots.txt file, website owners can keep Googlebot away from specific areas of their website. Note that robots.txt governs crawling, not indexing: a disallowed URL can still be indexed, without its content, if other pages link to it.

To use the robots.txt file, website owners need to create a plain-text file named “robots.txt” and place it in the root directory of their website. The file contains instructions, known as “directives,” grouped under the crawler they apply to, with one directive per line. For example, the “Disallow” directive can be used to prevent Googlebot from crawling specific pages or directories.

Here is an example of how to use the robots.txt file to prevent Googlebot from crawling a specific directory:

User-agent: Googlebot
Disallow: /private-page/

This code tells Googlebot not to crawl anything under the “/private-page/” directory. Website owners can also use the “Allow” directive to permit crawling of specific pages or subdirectories inside an otherwise disallowed directory.
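
Before deploying a rule like this, it can help to check it locally. The following is a minimal sketch using Python’s standard urllib.robotparser module; the example.com URLs and the is_crawl_allowed helper are hypothetical names chosen for illustration. Python’s parser does plain prefix matching and may not mirror every detail of Google’s own matching rules, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The rule from the example above, written one directive per line.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private-page/
"""


def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical URLs used only to exercise the rule.
    for url in (
        "https://example.com/private-page/report.html",
        "https://example.com/blog/",
    ):
        print(url, is_crawl_allowed(ROBOTS_TXT, "Googlebot", url))
```

A result of False only means the URL is blocked from crawling; it says nothing about whether the URL is already in Google’s index.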

Some crawlers also honor a “Crawl-delay” directive in robots.txt, which asks them to wait between requests. Googlebot ignores this directive, so it cannot be used to slow Google’s crawling, although it may still reduce load from other crawlers on websites with large amounts of content or high traffic volumes.

While the robots.txt file is an effective way to control crawling, it is not a foolproof method for keeping content out of Google. Compliance is voluntary, the file itself is publicly readable (so it can reveal the very paths an owner would rather hide), and disallowed URLs can still be indexed if they are linked from elsewhere. Website owners should regularly check which of their pages are indexed to ensure that sensitive information is not being exposed.

By using the robots.txt file to manage crawling, website owners can take a proactive approach to steering Googlebot away from areas it has no reason to visit. For content that must stay out of search results entirely, robots.txt should be combined with the other methods described in this article.

Meta Tags: A Simple Way to Control Indexing

Meta tags are a simple yet effective way to control indexing on a website. These small pieces of code are placed in the head section of a webpage’s HTML and tell search engine crawlers, such as Googlebot, how to index that page. Because they work page by page, meta tags let website owners keep individual pages out of Google’s index and therefore out of search results.

Two of the most commonly used meta tags for controlling indexing are the “noindex” and “nofollow” tags. The “noindex” tag tells Googlebot not to index a specific page, while the “nofollow” tag tells Googlebot not to follow any links on the page. By using these tags, website owners can keep pages such as login screens or internal search results out of Google’s index. Meta tags only work in HTML pages; for non-HTML files such as PDFs, the same directives can be sent in an X-Robots-Tag HTTP response header.

Here is an example of how to use the “noindex” meta tag:

<meta name="robots" content="noindex">

This code tells Googlebot not to index the page. Website owners can also use the “nofollow” meta tag to prevent Googlebot from following any links on the page:

<meta name="robots" content="nofollow">
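
To confirm that a page actually carries the intended tag, the HTML can be inspected programmatically. The sketch below uses Python’s standard html.parser module; the RobotsMetaParser class and robots_directives function are illustrative names, and the sample markup is made up. It reads only meta tags named “robots” or “googlebot” and does not look at HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # attrs is a list of (name, value) pairs; value may be None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                directive = part.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html: str) -> set:
    """Return the set of robots directives declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


if __name__ == "__main__":
    sample = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(sorted(robots_directives(sample)))
```

Running a check like this against each page that is supposed to be excluded makes it easier to catch a tag that was dropped during a template change.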

Several directives can be combined in one tag by separating them with commas, for example “noindex, nofollow”. Meta tags cannot control the crawl rate, however: they only affect how a page that has already been crawled is indexed and how its links are treated.

While meta tags are an effective way to control indexing, it’s essential to note that they are not a foolproof method. Googlebot has to crawl a page to see its meta tags, so a “noindex” tag on a page that is disallowed in robots.txt will never be read, and a newly added tag takes effect only after the page is recrawled. Website owners should regularly check which of their pages are indexed to ensure that sensitive information is not being indexed.

By using meta tags to control indexing, website owners can take a proactive approach to protecting their website’s sensitive information and proprietary content. This can help prevent unwanted indexing and ensure that a website’s online presence is secure and controlled.

In addition to using meta tags, website owners can also use other methods to control indexing, such as password protection and robots.txt files. By combining these methods, website owners can ensure that their website is protected from unwanted indexing and that sensitive information is kept out of the public eye.

Password Protection and Indexing

Password protection is a common method used to prevent unauthorized access to sensitive areas of a website. However, it can also be used to prevent Google from indexing these areas. By password-protecting sensitive pages or directories, website owners can prevent Googlebot from crawling and indexing them, thereby keeping sensitive information out of the public eye.

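There are several ways to restrict access to a website, including HTTP authentication, cookie-based login sessions, and JavaScript checks. However, not all of them are effective in keeping Googlebot out. Server-side methods such as HTTP authentication are effective, because Googlebot does not submit credentials and receives only an error response instead of the content. Checks that run purely in the browser, such as a JavaScript password prompt that hides content already sent with the page, are not: the content is still delivered to anyone who requests the URL, including crawlers.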

A stricter approach is to combine HTTP authentication with IP restrictions. This method involves restricting access to sensitive areas based on IP address, and requiring a username and password to access these areas. It adds a second layer of protection to HTTP authentication alone, as a request needs both an allowed IP address and a valid username and password to reach sensitive areas.
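
The combined check can be sketched as follows. This is a minimal illustration of the decision logic only, not a complete server: the network range, username, and password are hypothetical placeholders, and a real deployment would normally rely on the web server’s built-in access controls and store a password hash rather than the password itself.

```python
import base64
import hmac
import ipaddress

# Hypothetical values for illustration only.
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
USERNAME = "editor"
PASSWORD = "correct horse battery staple"


def ip_is_allowed(remote_ip: str) -> bool:
    """Return True if remote_ip falls inside one of the allowed networks."""
    addr = ipaddress.ip_address(remote_ip)
    return any(addr in network for network in ALLOWED_NETWORKS)


def credentials_are_valid(authorization_header) -> bool:
    """Validate an HTTP Basic 'Authorization' header value."""
    if not authorization_header or not authorization_header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(authorization_header[6:], validate=True).decode("utf-8")
    except ValueError:  # covers malformed base64 and invalid UTF-8
        return False
    user, separator, password = decoded.partition(":")
    if not separator:
        return False
    # compare_digest avoids leaking information through comparison timing.
    user_ok = hmac.compare_digest(user.encode(), USERNAME.encode())
    password_ok = hmac.compare_digest(password.encode(), PASSWORD.encode())
    return user_ok and password_ok


def status_for_request(remote_ip: str, authorization_header) -> int:
    """Return the HTTP status a protected area would answer with."""
    if not ip_is_allowed(remote_ip):
        return 403  # wrong network: refuse outright
    if not credentials_are_valid(authorization_header):
        return 401  # right network, but missing or bad credentials
    return 200
```

A crawler that sends no credentials gets a 401 or 403 response instead of the page, so there is no content for it to index.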

Another option is to use a Content Delivery Network (CDN) or similar edge service that offers access controls. Many such services can be configured to require a username and password for sensitive areas, and to block requests by IP address or user agent, including requests from crawlers.

While password protection is the most reliable way to keep Google from indexing sensitive areas, it only works if it is configured correctly. Googlebot does not guess passwords, but it can crawl and index any URL that is accidentally left unprotected, such as a directly linked file that sits outside the protected directory. Therefore, it is essential to verify that every sensitive URL requires authentication, to use strong passwords to keep human attackers out, and to regularly check that sensitive information is not being indexed.

In addition to password protection, website owners can also use other methods to prevent Google from indexing sensitive areas, such as using robots.txt files and meta tags. By combining these methods, website owners can ensure that their website is protected from unwanted indexing and that sensitive information is kept out of the public eye.

Using Google Search Console to Manage Indexing

Google Search Console is a powerful tool that allows website owners to manage and monitor their website’s indexing settings. By using Google Search Console, website owners can ensure that their website is properly indexed and that sensitive information is not being indexed.

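One of the key features of Google Search Console is the ability to monitor indexing. Website owners can use the page indexing report in the “Indexing” section of the console to see which pages are indexed and why others are not, and the Removals tool to request that specific pages or directories be temporarily hidden from search results. A removal request lasts about six months, so it should be paired with a permanent measure such as a “noindex” tag or password protection.

Google Search Console also reports on crawl activity. The Crawl Stats report shows how often Googlebot requests pages and how the server responds. Search Console no longer offers a crawl rate setting (the legacy crawl rate limiter was retired in early 2024), so website owners who need to reduce the load on the website’s servers can instead temporarily return 503 or 429 status codes, which prompts Googlebot to slow down.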
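
Load from crawling can also be eased on the server side, independently of any console setting: Googlebot backs off when it receives 503 or 429 responses. The sketch below shows that decision in isolation, assuming a hypothetical overload_response helper and a made-up threshold of concurrent requests; how load is actually measured depends on the server. It is meant as a short-term measure, since serving error responses for an extended period can affect how pages are treated in search.

```python
def overload_response(active_requests: int, max_requests: int, retry_after_seconds: int = 120):
    """Return (status, headers) asking clients to back off when the server is saturated.

    active_requests and max_requests are hypothetical load measures; a real
    server would derive them from its own metrics.
    """
    if active_requests >= max_requests:
        # 503 signals a temporary condition; Retry-After hints when to come back.
        return 503, {"Retry-After": str(retry_after_seconds)}
    return 200, {}


if __name__ == "__main__":
    print(overload_response(active_requests=250, max_requests=200))
    print(overload_response(active_requests=40, max_requests=200))
```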

Google Search Console also provides website owners with detailed reports on indexing errors and warnings. These reports can help website owners to identify and fix issues that may be preventing their website from being properly indexed.

In addition to these features, Google Search Console lets website owners submit sitemaps and inspect individual URLs. Sitemaps tell Google which pages the owner does want indexed, so sensitive URLs should be left out of them, and the URL Inspection tool shows whether a given URL is indexed and whether a “noindex” tag was detected on it.

By using Google Search Console, website owners can take a proactive approach to managing their website’s indexing settings and ensuring that sensitive information is not being indexed. This can help to prevent unwanted indexing and reduce the risk of sensitive information being exposed.

It’s also important to note that Google Search Console is a free tool, available to any website owner who verifies ownership of their website. In short, it lets website owners monitor which pages are indexed, spot problems early, and confirm that sensitive information is not being indexed.

Common Mistakes to Avoid When Trying to Keep Google from Indexing Your Website

When trying to keep Google from indexing your website, there are several common mistakes that website owners make. These mistakes can lead to unwanted indexing or, conversely, to important pages disappearing from search results.

One of the most common mistakes is incorrect use of robots.txt files. Robots.txt files instruct Googlebot on which pages or directories it may crawl. If the file is not properly formatted or contains incorrect instructions, Googlebot may skip the invalid rules and crawl pages that should be excluded. A related mistake is relying on robots.txt alone to keep a page out of the index: a disallowed URL can still be indexed if other pages link to it.

Another common mistake is incorrect use of meta tags. Meta tags, such as the “noindex” and “nofollow” tags, are used to control indexing and link following. However, if these tags are misspelled, placed outside the head section of the page, or added to pages that robots.txt blocks Googlebot from crawling, Googlebot will not act on them and the pages may remain indexed.
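
The interaction between robots.txt and the “noindex” tag is a frequent source of these mistakes, because the tag can only be read on a page that Googlebot is allowed to crawl. The sketch below spells out the four combinations as a small decision table; the diagnose function is a hypothetical helper, and its two inputs would come from checks the owner runs separately.

```python
def diagnose(crawl_allowed: bool, has_noindex: bool) -> str:
    """Describe the likely indexing outcome for one URL.

    crawl_allowed: whether robots.txt permits Googlebot to fetch the URL.
    has_noindex:   whether the page carries a "noindex" directive.
    """
    if not crawl_allowed and has_noindex:
        return "conflict: page is blocked from crawling, so its noindex tag cannot be read"
    if not crawl_allowed:
        return "not crawled: URL may still be indexed without content if linked elsewhere"
    if has_noindex:
        return "excluded: page is crawled and kept out of the index"
    return "indexable: page is crawled and eligible for indexing"


if __name__ == "__main__":
    for crawl_allowed in (True, False):
        for has_noindex in (True, False):
            print(crawl_allowed, has_noindex, "->", diagnose(crawl_allowed, has_noindex))
```

The “conflict” case is the one to fix first: either remove the Disallow rule so the tag can be read, or protect the page with a password instead.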

Another mistake is not regularly monitoring and maintaining website indexing settings. Website owners should regularly check their website’s indexing settings to ensure that sensitive information is not being indexed and that Googlebot is not crawling and indexing pages that should be excluded.

Not using password protection is another common mistake. Password protection can be used to prevent Googlebot from crawling and indexing sensitive areas of a website. However, if password protection is not used, Googlebot may crawl and index these areas, potentially exposing sensitive information.

Not using Google Search Console is another mistake. Google Search Console provides website owners with the ability to monitor and control indexing settings, as well as identify and fix issues that may be preventing their website from being properly indexed.

By avoiding these common mistakes, website owners can ensure that their website is properly indexed and that sensitive information is not being exposed. Regular monitoring and maintenance of website indexing settings, proper use of robots.txt files and meta tags, and use of password protection and Google Search Console can all help to prevent unwanted indexing and ensure that a website is properly indexed.

Best Practices for Keeping Your Website Out of Google’s Index

Keeping your website out of Google’s index requires regular monitoring and maintenance of your website’s indexing settings. Here are some best practices to help you achieve this:

Regularly review your website’s indexing settings to ensure that sensitive information is not being indexed. Use tools like Google Search Console to monitor your website’s indexing settings and identify any issues that may be preventing your website from being properly indexed.

Use robots.txt files and meta tags to control crawling and indexing. Robots.txt files instruct Googlebot on which pages or directories it may crawl, while meta tags control indexing and link following on a page-by-page basis. Do not disallow a page in robots.txt if it relies on a “noindex” tag, since Googlebot must crawl the page to see the tag.

Use password protection to prevent Googlebot from crawling and indexing sensitive areas of your website, such as account pages or confidential documents. Of the methods described here, this is the only one that also keeps the content away from unauthorized human visitors.

Regularly update your website’s indexing settings to reflect any changes to your website’s content or structure. This can help prevent Googlebot from crawling and indexing outdated or irrelevant content.

Use Google Search Console to monitor and control indexing of your website. Google Search Console provides website owners with the ability to monitor and control indexing settings, as well as identify and fix issues that may be preventing their website from being properly indexed.

By following these best practices, website owners can keep sensitive information out of search results while the rest of their website remains properly indexed.

In addition to these best practices, website owners should also be aware of the potential risks of allowing Google to index their website without control. These risks include exposure of sensitive information, increased spam, and decreased website performance.

By understanding these risks and taking steps to prevent unwanted indexing, website owners can protect their website and ensure that sensitive information is not being exposed.