Understanding the Importance of Search Exclusions

Excluding certain content from Google search results helps maintain online reputation, limit the exposure of sensitive information, and support search engine optimization (SEO). When content is not properly excluded, it can gain unwanted visibility and compromise personal or business interests. For instance, excluding outdated or irrelevant pages keeps them out of search results and helps maintain a positive online presence. Excluding pages that contain personal data or confidential business details also makes that information harder to find, although exclusion only hides a page from search. It does not restrict access to the page itself, so it is not a substitute for proper access controls.

Search exclusions also play a significant role in SEO. By excluding low-quality or duplicate content, websites can improve their overall search engine ranking and increase their online visibility. This, in turn, can drive more traffic to the website, generating leads and sales. Furthermore, excluding content that is not relevant to the target audience can help improve the website’s user experience, leading to increased engagement and conversion rates.

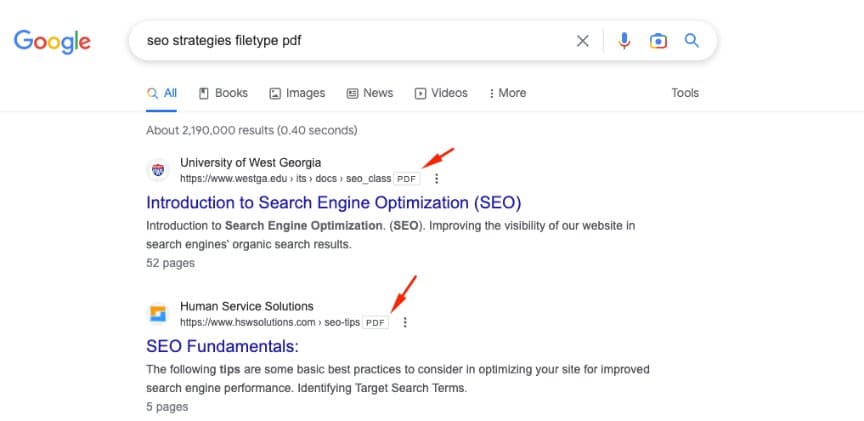

In some cases, content may need to be excluded from Google search results due to copyright or intellectual property concerns. For example, if a website is found to be hosting copyrighted material without permission, excluding it from search results reduces its visibility while the underlying issue is resolved, though only removing the material addresses the infringement itself. Similarly, excluding content that does not comply with Google’s guidelines can help prevent penalties and maintain a good standing with the search engine.

It is essential to note that excluding content from Google search results requires careful consideration and planning. Website owners and administrators must ensure that the content they exclude is not essential for their online presence or business operations. Moreover, they must also ensure that the exclusion methods used are compliant with Google’s guidelines and do not compromise the website’s overall SEO.

By understanding the importance of search exclusions, website owners and administrators can take proactive steps to protect their online reputation, sensitive information, and SEO. This includes using various exclusion methods, such as robots.txt files, meta tags, and URL parameters, to control how search engines crawl and index their website content.

As the online landscape continues to evolve, the need for effective search exclusions will only continue to grow. By staying ahead of the curve and implementing best practices for search exclusions, website owners and administrators can ensure their online presence remains secure, relevant, and optimized for success.

How to Remove Personal Information from Google Search

Removing personal information from Google search results is a crucial step in maintaining online privacy and security. When personal information appears in search results, it can be accessed by anyone, including potential employers, creditors, and even identity thieves. Fortunately, there are steps that can be taken to remove personal information from Google search results.

The first step in removing personal information from Google search results is to identify the source of the information. This can be a website, social media profile, or other online platform. Once the source has been identified, it is essential to contact the website administrator or platform owner to request removal of the personal information.

Google also provides a URL removal tool that can be used to remove personal information from search results. This tool allows users to request removal of specific URLs that contain personal information. To use the tool in Google Search Console, users must first verify ownership of the website, and then submit a removal request to Google. Removals made this way are temporary, so the page itself must also be deleted, updated, or marked as not to be indexed for the change to last.

Another way to remove personal information from Google search results is to tell Google not to include a page in search, which is done with the “noindex” directive. This directive lets site owners specify which pages should not be indexed by Google. To use it, add a robots meta tag to the HTML header of the webpage, specifying that the page should not be indexed. This only works on pages you control and that Google is allowed to crawl.

It is also essential to note that removing personal information from Google search results can be a time-consuming process. It may take several days or even weeks for the information to be removed from search results. Additionally, it is crucial to ensure that the personal information is removed from the source website or platform, as Google will continue to index the information if it remains available online.

In some cases, content may also be removed from Google search results on legal grounds, such as copyright or intellectual property concerns. For example, if a website is hosting copyrighted material without permission, the rights holder can report it, and Google may remove the content from search results.

By taking proactive steps to remove personal information from Google search results, individuals can help protect their online privacy and security. This includes using Google’s URL removal tool, contacting website administrators, and applying the “noindex” directive to pages they control.

It is also essential to be aware of the potential risks associated with removing personal information from Google search results. For example, removing information from search results may not necessarily remove it from the internet entirely. Additionally, removing information from search results may not prevent it from being accessed by other means, such as through social media or other online platforms.

The Role of Robots.txt in Search Exclusions

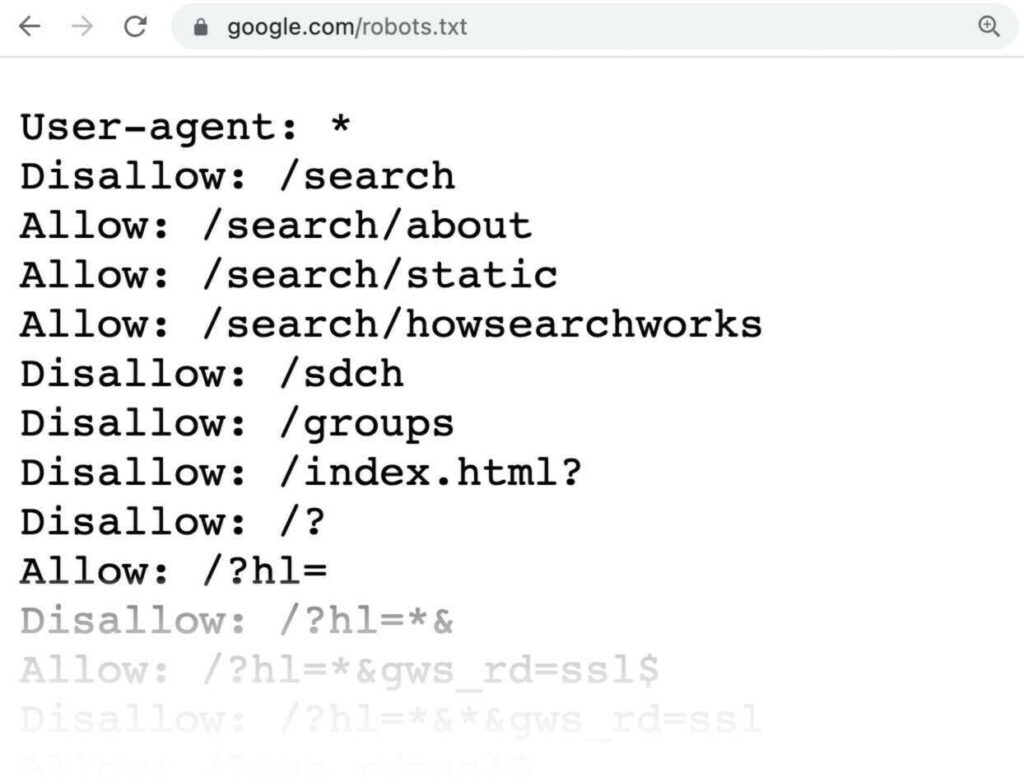

The robots.txt file is a crucial component in controlling how search engines crawl website content. This file implements what is known as the “robots exclusion standard” and tells search engine crawlers which pages or directories they may crawl and which they should skip. It governs crawling rather than indexing, a distinction that matters for the limitations discussed later in this section.

By using the robots.txt file, website owners can specify which pages or directories should not be crawled by search engines. This can be useful for keeping crawlers away from areas such as login pages or administrative directories. Note that robots.txt is publicly readable and is not a security mechanism, so genuinely sensitive pages should also be protected by authentication. Additionally, the robots.txt file can be used to keep crawlers away from low-quality or duplicate content, which can help search engines focus on the pages that matter.

To use the robots.txt file for search exclusions, website owners must first create the file and add it to the root directory of their website. The file should contain instructions for search engine crawlers, using the “Disallow” directive to specify which pages or directories to exclude. For example, to exclude a specific page from search results, the following code can be added to the robots.txt file:

User-agent: *
Disallow: /path/to/excluded/page

This code instructs all search engine crawlers not to crawl the specified page. Website owners can also use the “Allow” directive to specify pages or directories that may be crawled even if they are located in a directory that is otherwise disallowed.
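The interaction between “Disallow” and “Allow” can be checked locally before a file is published. The following is a minimal sketch using Python’s standard urllib.robotparser module; the rules, paths, and the is_crawlable helper are illustrative assumptions, not taken from a real site. Python’s parser applies the first matching rule, which may differ from how a given search engine resolves conflicts, so the “Allow” line is listed first here.

```python
from urllib import robotparser

# Hypothetical rules; the paths are placeholders for illustration only.
# Python's parser uses the first rule that matches, so Allow comes first.
RULES = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
""".splitlines()

parser = robotparser.RobotFileParser()
parser.parse(RULES)


def is_crawlable(url, user_agent="Googlebot"):
    """Return True if the parsed rules permit user_agent to crawl url."""
    return parser.can_fetch(user_agent, url)


print(is_crawlable("http://example.com/private/report.html"))     # disallowed directory
print(is_crawlable("http://example.com/private/press-kit.html"))  # explicitly allowed
print(is_crawlable("http://example.com/index.html"))              # no rule applies
```

A check like this only confirms what the rules say about crawling. It does not show whether a URL is currently in a search engine’s index.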

It’s essential to note that the robots.txt file is not a foolproof method for excluding content from search results. Because it only blocks crawling, a search engine may still index a disallowed URL, without its content, if other pages link to it. Additionally, adding a page to the robots.txt file does not remove it from search results if it has already been indexed.

Despite these limitations, the robots.txt file remains a powerful tool for controlling how search engines crawl and index website content. By using the file effectively, website owners can help improve the quality of their search engine rankings, protect sensitive information, and ensure that their website is accurately represented in search results.

Best practices for using the robots.txt file include regularly reviewing and updating the file to ensure that it is accurate and effective, using the “Disallow” directive to exclude sensitive information and low-quality content, and testing the file to ensure that it is working correctly.

Using Meta Tags to Control Search Engine Indexing

Meta tags are a crucial component in controlling how search engines index website content. Unlike robots.txt, which works at the level of paths and directories, meta tags are set page by page: each page states whether it should be indexed or excluded from search results.

One of the most commonly used directives for search exclusion is “noindex”, which is set through the robots meta tag. It instructs search engines not to index the page it appears on, effectively excluding that page from search results. To use “noindex”, website owners can add the following tag to the HTML header of the page: `<meta name="robots" content="noindex">`

This code instructs all search engines to exclude the page from their index. Website owners can also use the “nofollow” directive to ask search engines not to follow the links on a specific page. This affects how the linked pages are discovered. It does not exclude the page carrying the tag from search results.

Both “noindex” and “nofollow” are values of the “robots” meta tag, which gives search engine crawlers page-level instructions on whether to index a page and how to treat its links. Several values can be combined in a single tag, separated by commas.

For example, to exclude a specific page from search results and also keep its links from being followed, website owners can add the following tag to the HTML header of the page: `<meta name="robots" content="noindex, nofollow">`

This code instructs all search engines to exclude the page from their index and not to follow any links on the page.
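To confirm that a page actually carries the intended directives, its HTML can be inspected with a small script. The sketch below uses Python’s standard html.parser module; the class name RobotsMetaParser, the helper robots_directives, and the sample page are assumptions made for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots" ...> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name.lower(): (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for part in attr.get("content", "").split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def robots_directives(html):
    """Return the set of robots meta directives declared in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page used only to exercise the helper.
page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```

This only reads the markup of a page. Whether a search engine has processed the tag depends on when the page was last crawled.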

Best practices for using meta tags for search exclusion include regularly reviewing and updating meta tags to ensure that they are accurate and effective, using the “noindex” and “nofollow” tags to exclude sensitive information and low-quality content, and testing meta tags to ensure that they are working correctly.

By using meta tags effectively, website owners can help improve the quality of their search engine rankings, protect sensitive information, and ensure that their website is accurately represented in search results.

It’s also important to note that meta tags are not a foolproof method for excluding content from search results. A crawler must be able to fetch a page in order to read its meta tags, so a “noindex” tag has no effect on a page that is blocked in robots.txt. A page that is already indexed also stays in search results until it is crawled again and the tag is seen.

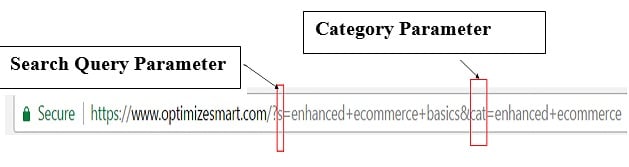

Excluding Content from Google Search Using URL Parameters

URL parameters can play a part in excluding specific content from Google search results, but a query string has no special meaning to Google on its own. A parameter affects indexing only if the site is set up to act on it, for example by serving a “noindex” directive for URLs that carry the parameter, or by blocking those URLs from crawling in robots.txt.

One way to use URL parameters for search exclusion is to reserve a parameter such as “noindex” for this purpose. For example, a site might use a URL like the following:

http://example.com/page?noindex=1

Google does not interpret this parameter itself. The server must respond to such URLs with a “noindex” robots meta tag or an X-Robots-Tag: noindex HTTP header. Only then will Google drop the URL from search results once it has crawled it again.

A “nofollow” parameter can be handled in the same way:

http://example.com/page?nofollow=1

Here too, the parameter is only a signal to the site’s own code, which must add the “nofollow” directive to the response. Note that “nofollow” asks Google not to follow the links on the page. It does not exclude the page itself from search results.
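A query parameter influences indexing only through what the server sends back for that URL. As a minimal sketch, assuming a site that chooses to treat ?noindex=1 and ?nofollow=1 as its own signals, the hypothetical helper below maps a request URL to the value of an X-Robots-Tag response header, which Google treats like a robots meta tag. The names x_robots_tag_for and PARAM_DIRECTIVES are illustrative and not part of any library.

```python
from typing import Optional
from urllib.parse import parse_qs, urlsplit

# Hypothetical mapping from this site's own query parameters to robots directives.
PARAM_DIRECTIVES = {"noindex": "noindex", "nofollow": "nofollow"}


def x_robots_tag_for(url: str) -> Optional[str]:
    """Return an X-Robots-Tag header value for url, or None if none applies."""
    query = parse_qs(urlsplit(url).query)
    directives = [
        directive
        for param, directive in PARAM_DIRECTIVES.items()
        if query.get(param) == ["1"]  # only the exact value "1" switches it on
    ]
    return ", ".join(directives) if directives else None


print(x_robots_tag_for("http://example.com/page?noindex=1"))
print(x_robots_tag_for("http://example.com/page?noindex=1&nofollow=1"))
print(x_robots_tag_for("http://example.com/page"))
```

In a real application the returned value would be attached to the HTTP response by whatever web framework the site uses. That part is left out because it depends on the framework.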

Best practices for using URL parameters for search exclusion include regularly reviewing which parameters the site acts on, confirming that parameterized URLs actually return the intended “noindex” or “nofollow” directive, and testing the behavior to ensure that it is working correctly.

By using URL parameters effectively, website owners can help improve the quality of their search engine rankings, protect sensitive information, and ensure that their website is accurately represented in search results.

It’s also important to note that URL parameters are not a foolproof method for excluding content from search results. A parameter does nothing unless the site responds to it with a directive that Google recognizes, and the same content may still be crawled and indexed under a URL without the parameter, especially if that URL is linked to from other pages on the website.

Additionally, website owners should be aware that using URL parameters for search exclusion can have unintended consequences, such as affecting the website’s crawl rate or indexation. Therefore, it’s essential to carefully consider the use of URL parameters for search exclusion and to test them thoroughly before implementing them on a live website.

Best Practices for Search Exclusion: Tips and Tricks

When it comes to excluding content from Google search results, it’s essential to follow best practices to ensure effective and efficient exclusion. Here are some expert tips and tricks to help you master search exclusions and maintain online reputation, protect sensitive information, and improve SEO.

To avoid common mistakes, it’s crucial to understand the difference between “do not include in Google search” and “remove from Google search.” While the former prevents new content from being indexed, the latter removes existing content from search results. Make sure to use the correct approach depending on your needs.

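Another best practice is to use the robots.txt file and meta tags together, each for its own purpose: robots.txt controls which pages or directories are crawled, while meta tags control whether a crawled page is indexed. Avoid applying both to the same page, because a page blocked in robots.txt cannot be crawled and its “noindex” tag is never seen. Neither method secures sensitive information by itself, so pages that must stay private should also be protected by authentication.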
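One pitfall when combining the two methods is a page that is both disallowed in robots.txt and tagged “noindex”: the crawler never fetches the page, so it never sees the tag. The sketch below, which assumes you already have a list of URLs known to carry a “noindex” tag, uses Python’s standard urllib.robotparser module to flag such conflicts. The function name find_hidden_noindex and the sample data are hypothetical.

```python
from urllib import robotparser


def find_hidden_noindex(robots_lines, noindex_urls, user_agent="Googlebot"):
    """Return URLs whose noindex tag cannot be seen because crawling is disallowed."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt rules and pages that carry a noindex meta tag.
robots_lines = ["User-agent: *", "Disallow: /drafts/"]
noindex_urls = [
    "http://example.com/drafts/old-post.html",
    "http://example.com/thank-you.html",
]

print(find_hidden_noindex(robots_lines, noindex_urls))
# ['http://example.com/drafts/old-post.html']
```

For any URL this reports, one of the two controls should usually be dropped, depending on whether the goal is to stop crawling or to keep the page out of the index.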

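When using URL parameters to exclude content, make sure the parameter names and values match exactly what the site or its robots.txt rules expect. For example, a URL of the form “url?parameter” can be matched by a robots.txt rule with a wildcard, such as Disallow: /*?sessionid= (where “sessionid” is a placeholder parameter name), which keeps URLs carrying that parameter from being crawled. However, be cautious not to overuse URL parameters, as large numbers of parameterized URLs can waste crawl activity and negatively impact your website’s SEO.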
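Google’s robots.txt handling supports two wildcards in path rules: * for any run of characters and $ for the end of the URL. That makes it possible to write rules that target parameterized URLs. The sketch below is a simplified matcher for a single pattern, written to illustrate how such a rule behaves. The function rule_matches and the sample patterns are assumptions, and the sketch does not implement how a crawler chooses between competing Allow and Disallow rules.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check one robots.txt path pattern (with * and $ wildcards) against a URL path."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then let every * stand for any run of characters.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    # Patterns are matched from the start of the path (including the query string).
    return re.match(regex, path) is not None


# "sessionid" is a placeholder parameter name used only for illustration.
print(rule_matches("/*?sessionid=", "/shop/item?sessionid=abc"))  # True
print(rule_matches("/*?sessionid=", "/shop/item"))                # False
print(rule_matches("/*.pdf$", "/docs/guide.pdf"))                 # True
print(rule_matches("/*.pdf$", "/docs/guide.pdf?download=1"))      # False
```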

Regularly monitoring your website’s crawl errors and search console reports can also help identify potential issues with search exclusions. By addressing these issues promptly, you can prevent unwanted content from being indexed and maintain a clean online reputation.

Finally, it’s essential to keep in mind that search exclusions are not a one-time task. As your website evolves, new content may be added, and existing content may need to be updated or removed. Regularly reviewing and updating your search exclusions can help ensure that your online presence remains accurate and up-to-date.

By following these best practices and tips, you can effectively exclude unwanted content from Google search results and maintain a strong online presence. Remember to always prioritize online reputation, protect sensitive information, and improve SEO to achieve long-term success in the digital landscape.

Common Challenges and Solutions in Search Exclusion

When attempting to exclude content from Google search results, several challenges may arise. Understanding these challenges and their solutions can help ensure effective search exclusion and maintain online reputation, protect sensitive information, and improve SEO.

One common challenge is the difficulty in removing outdated or obsolete content from Google search results. This can be due to the content being cached or indexed by Google, making it hard to remove. To overcome this, use Google’s URL removal tool to request the removal of outdated content. Additionally, update the content on your website to reflect the changes, and use the “noindex” meta tag to prevent the outdated content from being re-indexed.

Another challenge is the inability to exclude content from Google search results due to crawl errors or website configuration issues. To resolve this, regularly monitor your website’s crawl errors and search console reports to identify potential issues. Fixing these issues promptly can help prevent unwanted content from being indexed and improve your website’s overall SEO.

Some website administrators may also face challenges in excluding content from Google search results due to the complexity of their website’s architecture. To overcome this, use the robots.txt file to specify which pages or directories to exclude, and use meta tags to control how search engines index and crawl your website content.

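In some cases, website administrators may not have control over the content they want to exclude from Google search results, such as user-generated content or third-party content. For user-generated pages on your own site, apply the “noindex” meta tag to the relevant page templates, and use “nofollow” on links you do not want search engines to follow. For content hosted on a site you do not control, ask that site’s owner to remove it or mark it “noindex”. If the content is served through infrastructure you do control, such as a content delivery network (CDN), it may be possible to configure it to send an X-Robots-Tag: noindex response header.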

Finally, some website administrators may experience challenges in excluding content from Google search results due to the limitations of any single exclusion method. To overcome this, use a combination of search exclusion methods, such as the robots.txt file, meta tags, and URL parameters, choosing each one for the job it actually does, to ensure effective exclusion of unwanted content.

By understanding these common challenges and their solutions, website administrators can effectively exclude unwanted content from Google search results and maintain a strong online presence. Remember to regularly monitor your website’s search exclusion efforts and adjust your strategies as needed to ensure long-term success.

Conclusion: Mastering Search Exclusions for Online Success

In conclusion, mastering search exclusions is crucial for maintaining online reputation, protecting sensitive information, and improving search engine optimization (SEO). By understanding the importance of search exclusions and implementing effective strategies, website administrators can ensure that their online presence is accurate, secure, and optimized for search engines.

Throughout this comprehensive guide, we have explored the various methods for excluding content from Google search results, including using the robots.txt file, meta tags, URL parameters, and Google’s URL removal tool. We have also discussed best practices for search exclusion, common challenges, and solutions for overcoming these challenges.

By applying the knowledge and techniques outlined in this guide, website administrators can effectively exclude unwanted content from Google search results and maintain a strong online presence. Remember, mastering search exclusions is an ongoing process that requires regular monitoring and adjustments to ensure long-term success.

As the digital landscape continues to evolve, the importance of search exclusions will only continue to grow. By staying ahead of the curve and mastering search exclusions, website administrators can protect their online reputation, safeguard sensitive information, and improve their website’s visibility and ranking in search engine results.

In today’s digital age, it is essential to have control over what content is included in Google search results. By using the techniques outlined in this guide, website administrators can ensure that their online presence is accurate, secure, and optimized for search engines, and avoid the negative consequences of unwanted content being indexed. Don’t let unwanted content harm your online reputation – master search exclusions today and take control of your online presence.