Understanding Google’s Indexing Process

Google’s indexing process is a complex, largely automated system that decides which pages appear in its search results. It involves three stages: crawling, indexing, and ranking. Crawling is the discovery of new and updated content on the web. Indexing is the analysis and storage of that content in Google’s databases. Ranking determines the order in which pages appear in search results. To remove a site from Google’s index effectively, you need to understand how these stages work together.

Google uses many factors to decide which pages to index and how to rank them, including relevance, authority, and user experience. Its algorithms analyze a site’s content, structure, and links to judge relevance and authority. User behavior may also play a role, although Google does not publish exactly how signals such as click-through rate or time spent on a page are used.

When Google crawls and indexes a page, it stores a copy of the page’s content in its index, and that stored copy is what it uses when ranking the page. If a page is later removed or changed, the stored copy can stay out of date until Google recrawls the page. This lag is one reason removals are not instant, and why understanding the indexing process matters when you want a site taken out of the index.

By understanding how Google’s indexing process works, site owners and administrators can control how Google crawls and indexes their own content, using tools such as Google Search Console, robots.txt files, and robots meta tags. In the next section, we’ll explore the reasons why someone might want to block a site from Google search results.

Why You Might Want to Block a Site from Google Search Results

There are several reasons why someone might want to block a site from Google search results. One common reason is to remove outdated or irrelevant content that is no longer useful or accurate. This can help to improve the overall quality of search results and prevent users from accessing information that is no longer relevant.

Another reason to block a site from Google search results is to keep sensitive pages out of search. For example, a website may contain confidential or proprietary information that should not be publicly accessible. Blocking the site from Google’s index stops that information from being found through Google Search, but it does not stop anyone who already has the URL from opening it. Truly confidential content also needs access control, such as password protection.

In some cases, people want malicious sites, such as phishing or malware pages, kept out of search results to protect users from online threats. Keep in mind that you can only block sites you control. For a third-party malicious site, the practical option is to report it to Google, for example through its Safe Browsing reports, rather than trying to block it yourself.

Additionally, website owners may want to block a site from Google search results to remove duplicate or redundant content. This can help to improve the overall quality of search results and prevent users from accessing multiple versions of the same content.

Understanding the reasons why someone might want to block a site from Google search results is essential in determining the best approach to take. In the next section, we’ll explore the first method for blocking a site from Google search results: using the Google Search Console.

Method 1: Using the Google Search Console

Google Search Console is a free tool that lets website owners manage their site’s presence in Google search results. Its Removals tool lets verified owners of a site ask Google to hide URLs from search results. This is useful when a site is no longer needed or is causing problems and you need it out of results quickly.

To request removal of a site in Search Console, follow these steps:

1. Sign in to Google Search Console and select the property for the site you want to remove. You must be a verified owner of that property.

2. Open “Removals” in the left-hand menu and click “New request”.

3. On the “Temporary removals” tab, enter the URL. To remove the whole site, enter its root URL and choose “Remove all URLs with this prefix”; to remove a single page, choose “Remove this URL only”.

4. Click “Next”, then “Submit request”.

Google reviews the request and, if it meets the removal criteria, hides the URLs from its search results. Requests are usually processed quickly, often within a day, although timing can vary.

Key points about using the Search Console to request removal of a site:

• Easy to use: The Search Console provides a simple and straightforward process for requesting removal of a site.

• Fast results: Approved requests usually take effect quickly, often within a day.

• Temporary by design: A removal lasts about six months. To keep the content out of the index permanently, also delete it (so the URL returns a 404 or 410 status), add a noindex tag, or put it behind a password.

However, a removal request does not guarantee that a site stays out of Google’s index. Once a temporary removal expires, the URLs can reappear if they are still publicly available and crawlable. In these cases, other methods, such as meta tags or the robots.txt file, may be necessary to block the site from Google search results.

Method 2: Using the Robots.txt File

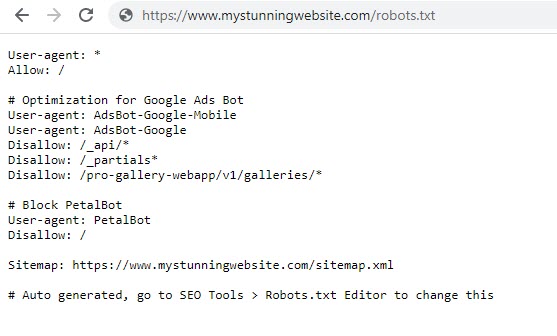

The robots.txt file is a plain-text file that tells web crawlers, such as Googlebot, which parts of a website they may crawl. It controls crawling rather than indexing: webmasters use it to specify which parts of a site crawlers should visit and which they should skip.

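To block Google’s crawler from crawling a site using the robots.txt file, follow these steps:

1. Create a plain-text file named “robots.txt” and upload it to the root directory of your website, so that it is served at an address like https://example.com/robots.txt (with your own domain in place of example.com).

2. In the file, name the crawler in a User-agent line (in this case, Googlebot) and list the paths you want to block in Disallow lines. Paths are relative to the site root, not full URLs.

For example:

User-agent: Googlebot
Disallow: /

This blocks Googlebot from crawling the entire website.

Alternatively, you can block specific directories or files:

User-agent: Googlebot
Disallow: /path/to/directory/

Or:

User-agent: Googlebot
Disallow: /path/to/file.html

Keep each User-agent line and its Disallow lines together without blank lines between them, because some parsers treat a blank line as the end of a group.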
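To sanity-check rules like these before uploading them, you could run them through Python’s standard-library urllib.robotparser. This is a minimal sketch under stated assumptions: example.com and the helper name build_parser are hypothetical, and Python’s parser is simpler than Google’s (it applies the first matching rule rather than the most specific one), so it is a quick check, not a guarantee of how Googlebot behaves.

```python
from urllib.robotparser import RobotFileParser

# The directory and file rules from the examples above, as one robots.txt file.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /path/to/directory/
Disallow: /path/to/file.html
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # example.com is a placeholder domain; only the path part is matched.
    for url in (
        "https://example.com/path/to/directory/page.html",
        "https://example.com/path/to/file.html",
        "https://example.com/about.html",
    ):
        verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
        print(url, "->", verdict)
```

Because the rules name only Googlebot, other crawlers are still allowed to fetch every path in this file.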

The benefits of using the robots.txt file to block Google’s crawlers include:

• Easy to implement: Creating a robots.txt file only requires a text editor and the ability to upload a file to your site’s root directory; no HTML knowledge is needed.

• Flexible: The robots.txt file lets you specify which parts of your website crawlers may crawl and which parts they should skip.

However, the robots.txt file is not a foolproof way to keep a site out of Google’s index. It stops Googlebot from crawling the blocked pages, but Google can still index their URLs, usually without a description, if other websites link to them.

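Be careful when combining robots.txt with meta tags. If robots.txt blocks a page, Googlebot never fetches it and therefore never sees a noindex tag on it. To remove a page with noindex, leave the page crawlable until Google has dropped it from the index.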

Method 3: Using Meta Tags

Meta tags are HTML tags that provide information about a web page to search engines like Google. The robots meta tag lets you instruct Google not to index a particular page; because it works page by page, blocking a whole site means adding it to every page. In this section, we’ll explore how to use meta tags to block Google from indexing a site.

The two directives most commonly used in the robots meta tag are “noindex” and “nofollow”. Only noindex keeps a page out of the index; nofollow controls how Google treats the links on the page.

The “noindex” directive tells Google not to index a particular page. To use it, add the following tag to the head section of your HTML document:

<meta name="robots" content="noindex">

This instructs Google not to index the page.

The “nofollow” directive tells Google not to follow any links on a particular page. To use it, add the following tag to the head section of your HTML document:

<meta name="robots" content="nofollow">

This instructs Google not to follow any links on the page. On its own, it does not stop the page from being indexed.

While meta tags are an effective way to block Google’s indexing, they only work if Google can crawl the page and see them. A noindex tag has no effect if the page is blocked by robots.txt, and it takes effect only after Google recrawls the page, so a page can stay in search results for a while after you add the tag.

Meta tags can also be combined with other methods, such as password protection. If you combine them with robots.txt, make sure the pages carrying noindex are not disallowed there, or Google will never see the tag.

Here’s how to use the “noindex” and “nofollow” directives together in a single tag:

<meta name="robots" content="noindex, nofollow">

This instructs Google not to index the page and not to follow any links on it.
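To confirm that a published page actually carries these directives, you could parse its HTML and collect the content of any robots meta tag. This minimal sketch uses Python’s built-in html.parser. The function name robots_directives is hypothetical, and the sketch also reads a googlebot-specific meta tag (name="googlebot"), which Google supports but which is not covered above.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases tag and attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return the set of robots directives declared in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives
```

For example, a page whose head contains the combined noindex, nofollow tag yields the set {"noindex", "nofollow"}, while a page with no robots meta tag yields an empty set.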

Additional Tips for Blocking Sites from Google Search Results

In addition to the methods discussed above, there are several other tips and best practices that can help you block sites from Google search results.

One effective way to block a site from Google search results is to use password protection. By requiring a username and password to access the site, you can prevent Google’s crawlers from indexing the site.
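As an illustration of password protection, here is a minimal sketch of HTTP Basic authentication in front of a Python WSGI app, using only the standard library. Everything in it is an assumption for illustration: the credentials, the realm name, and the function names are hypothetical, real sites usually configure this in their web server or hosting platform instead, and Basic authentication only protects the password in transit when the site is served over HTTPS.

```python
import base64
import hmac
from wsgiref.simple_server import make_server

# Hypothetical credentials for illustration only; load real ones from a secret store.
USERNAME = "editor"
PASSWORD = "change-me"


def credentials_ok(auth_header: str) -> bool:
    """Check an HTTP Basic 'Authorization' header against the expected credentials."""
    # Simplification: only the exact "Basic " prefix is accepted.
    if not auth_header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(auth_header[len("Basic "):], validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    user, _, password = decoded.partition(b":")
    # compare_digest compares in constant time to avoid leaking timing information.
    user_ok = hmac.compare_digest(user, USERNAME.encode("utf-8"))
    password_ok = hmac.compare_digest(password, PASSWORD.encode("utf-8"))
    return user_ok and password_ok


def protected_app(environ, start_response):
    """WSGI app that serves content only to clients sending valid credentials."""
    if not credentials_ok(environ.get("HTTP_AUTHORIZATION", "")):
        # Crawlers receive a 401 instead of the page content, so there is nothing to index.
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", 'Basic realm="Private site"'),
            ("Content-Type", "text/plain; charset=utf-8"),
        ])
        return [b"Authentication required.\n"]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Private content.\n"]


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Run a local development server; not intended for production use."""
    with make_server(host, port, protected_app) as server:
        server.serve_forever()
```

Calling serve() starts a local server for testing; any request without valid credentials, including one from a crawler, gets a 401 response instead of the content.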

Another way to reduce a site’s visibility is to remove links to it. Both internal links (links from your own pages) and links from other websites help Google discover pages, so removing the links you control makes the site harder to find. On its own, this does not remove pages that are already indexed.

Google’s URL removal tool (the Removals tool in Search Console) is another useful resource. It lets you ask Google to hide specific URLs from its search results, which helps when a site is no longer relevant or is causing problems. Because these removals are temporary, pair them with a lasting method such as noindex or password protection.

It’s also important to note that blocking a site from Google search results is not a one-time process. You will need to regularly monitor the site and update your blocking methods as needed to ensure that the site remains blocked.

Finally, it’s worth noting that blocking a site from Google search results is not always a straightforward process. There may be times when you need to use a combination of methods to effectively block a site, and there may be times when you need to seek the help of a professional to ensure that the site is properly blocked.

By following these tips and best practices, you can effectively block sites from Google search results and improve the overall quality of your online presence.

Common Mistakes to Avoid When Blocking Sites from Google Search Results

When trying to block a site from Google search results, there are several common mistakes to avoid. These mistakes can lead to ineffective blocking, wasted time, and even damage to your online reputation.

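One common mistake is using incorrect robots.txt directives or meta tags, or combining them so that they cancel each other out. A frequent example is disallowing a page in robots.txt while relying on a noindex tag on that same page: Googlebot cannot fetch the page, so it never sees the tag and may keep the URL indexed. To avoid this, double-check the syntax of your robots.txt file and meta tags, and make sure the methods you combine are compatible.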
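One way to catch the robots.txt and noindex conflict is to cross-check the two automatically. This sketch assumes you already have a mapping from each URL to the robots directives found on that page (collected however you like); the function name find_conflicts and the sample URLs are hypothetical, and it uses Python’s urllib.robotparser, whose matching is simpler than Google’s.

```python
from urllib.robotparser import RobotFileParser


def find_conflicts(robots_txt: str, pages: dict[str, set[str]],
                   agent: str = "Googlebot") -> list[str]:
    """Return URLs that carry noindex but are disallowed for `agent` in robots.txt.

    `pages` maps each URL to the robots directives found on it,
    for example {"noindex", "nofollow"}.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # A disallowed page is never fetched, so its noindex tag is never seen.
    return sorted(
        url for url, directives in pages.items()
        if "noindex" in directives and not parser.can_fetch(agent, url)
    )
```

Any URL this returns needs one of the two signals changed: either allow crawling so Google can see noindex, or drop noindex and accept that the URL itself may stay indexed.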

Another common mistake is neglecting to update links. If you’re trying to block a site from Google search results, remove the links to it that you control, starting with internal links on your own pages. Links help Google’s crawlers discover pages, so leftover links make it more likely that the site is found and indexed again.
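To find links you control that still point at content you are blocking, you could scan your own pages’ HTML. This is a minimal sketch using Python’s html.parser and urllib.parse. The function name links_to_blocked, its parameters, and the example hosts and paths are hypothetical, and it only looks at <a href> links in the HTML you pass in.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_to_blocked(page_url: str, html: str, blocked_host: str,
                     blocked_prefix: str = "/") -> list[str]:
    """Return absolute URLs on the page that point into the blocked host and path prefix."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    hits = []
    for href in collector.hrefs:
        # Resolve relative links against the page they appear on.
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        if parsed.hostname == blocked_host and parsed.path.startswith(blocked_prefix):
            hits.append(absolute)
    return hits
```

Running this over each page of your site lists the links to remove or update; it cannot see links on other people’s websites.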

Additionally, failing to monitor the site’s indexing status regularly can undo your work. Temporary removals expire, site updates can accidentally drop a noindex tag or a robots.txt rule, and Google’s systems change over time. Checking the site’s status regularly, for example with the URL Inspection tool in Search Console, helps you catch these problems early and ensure that the site remains blocked.
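Alongside Search Console, you could periodically check the signals your own server sends. This sketch works on an HTTP response you have already fetched (status code, headers, and HTML body) and lists anything that should keep the page out of the index. It only checks what your site says, not whether Google has actually dropped the page. The function and class names are hypothetical, and it also checks the X-Robots-Tag HTTP header, the header-based equivalent of the robots meta tag, which is not covered above.

```python
from html.parser import HTMLParser


class RobotsMetaContent(HTMLParser):
    """Collects the content attribute of every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.contents: list[str] = []

    def handle_starttag(self, tag, attrs):
        values = {name: (value or "") for name, value in attrs}
        if tag == "meta" and values.get("name", "").lower() == "robots":
            self.contents.append(values.get("content", "").lower())


def blocking_signals(status: int, headers: dict[str, str], html: str) -> list[str]:
    """List the signals in one HTTP response that should keep the page out of the index."""
    signals = []
    if status in (401, 403, 404, 410):
        signals.append(f"HTTP {status} response")
    # HTTP header names are case-insensitive, so normalise them before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", "").lower():
        signals.append("X-Robots-Tag header contains noindex")
    parser = RobotsMetaContent()
    parser.feed(html)
    parser.close()
    if any("noindex" in content for content in parser.contents):
        signals.append("robots meta tag contains noindex")
    return signals
```

An empty list means nothing in the response tells Google to drop the page. With urllib.request, note that urlopen raises HTTPError for 4xx responses; its code and headers attributes still give you the values to pass in.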

Using a method that only covers Google can also leave gaps. For example, a robots.txt group for “User-agent: Googlebot” does not apply to other search engines’ crawlers. If you want the site out of all search engines, use methods that apply to every compliant crawler, such as a “User-agent: *” group in robots.txt, a robots meta tag (name="robots" rather than name="googlebot"), or password protection.
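The difference between a Googlebot-only group and a wildcard group can be seen with Python’s urllib.robotparser. This is a minimal sketch: the helper name blocked_for and the example URL are hypothetical, and Bingbot and DuckDuckBot are used only as example user-agent tokens for other crawlers.

```python
from urllib.robotparser import RobotFileParser

GOOGLEBOT_ONLY = """\
User-agent: Googlebot
Disallow: /
"""

ALL_CRAWLERS = """\
User-agent: *
Disallow: /
"""


def blocked_for(robots_txt: str, agents: list[str],
                url: str = "https://example.com/") -> dict[str, bool]:
    """Map each user-agent token to True if robots.txt blocks it from `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: not parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["Googlebot", "Bingbot", "DuckDuckBot"]
    print(blocked_for(GOOGLEBOT_ONLY, agents))
    # {'Googlebot': True, 'Bingbot': False, 'DuckDuckBot': False}
    print(blocked_for(ALL_CRAWLERS, agents))
    # {'Googlebot': True, 'Bingbot': True, 'DuckDuckBot': True}
```

Only the wildcard group blocks every crawler that honors robots.txt; crawlers that ignore robots.txt are not affected by either file.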

Finally, don’t assume a removal worked without checking. After you submit a request, confirm its status in the Removals tool in Search Console, and use the URL Inspection tool to see whether Google still has the page in its index.

By avoiding these common mistakes, you can ensure that your attempts to block a site from Google search results are successful and effective.

Conclusion: Successfully Removing Unwanted Sites from Google’s Index

Removing unwanted sites from Google’s index can be a challenging task, but with the right strategies and techniques, it can be done effectively. In this article, we have discussed various methods for blocking sites from Google search results, including using the Google Search Console, robots.txt file, and meta tags.

We have also provided additional tips and best practices for blocking sites from Google search results, such as using password protection, removing internal linking, and using Google’s URL removal tool. Additionally, we have discussed common mistakes to avoid when trying to block a site from Google search results, such as using incorrect robots.txt directives or meta tags, and neglecting to update internal linking.

By following the methods and tips outlined in this article, you can successfully remove unwanted sites from Google’s index and improve the overall quality of your online presence. Remember to always follow best practices and avoid common mistakes to ensure that your blocking efforts are effective.

In conclusion, blocking sites from Google search results is a crucial step in maintaining a positive online reputation and protecting sensitive information. By understanding how Google indexes websites and using the right strategies and techniques, you can effectively remove unwanted sites from Google’s index and achieve your online goals.