Why You Might Want to Exclude a Website from Google Search Results

Websites are often created with the intention of being visible to the public and attracting visitors. However, there are instances where website owners might want to exclude their site from Google search results. This could be due to various reasons such as removing outdated or irrelevant content, protecting sensitive information, or preventing unnecessary traffic. For instance, a website might have been created for a temporary event or promotion, and once the event has passed, the website is no longer relevant. In such cases, excluding the website from Google search results can help prevent confusion and maintain a clean online presence.

Another scenario where excluding a website from Google search results might be necessary is when sensitive information is involved. For example, a website might contain confidential data or personal information that should not be easy to find. Removing the website from Google’s index keeps that information out of search results. De-indexing only hides a page from search, however: anyone who has the URL can still open it, so genuinely confidential data should also sit behind authentication.

In some cases, website owners might want to exclude their site from Google search results to prevent unnecessary traffic, for example because the website is under construction or the content is not yet suitable for public consumption. Excluding the website from Google’s index reduces unwanted search traffic, although visitors who already have a link can still reach the site.

Understanding the reasons why a website owner might want to exclude their site from Google search results is crucial in determining the best course of action. By identifying the motivations behind this decision, website owners can take the necessary steps to remove their site from Google’s index and maintain a clean online presence. This is where learning how to exclude a website from Google search results becomes essential.

Fortunately, excluding a website from Google search results is a relatively straightforward process. Website owners can use the Google Search Console, the Robots Exclusion Protocol (REP), and noindex and nofollow meta tags. Each does a different job: the Search Console hides URLs quickly but temporarily, robots.txt rules control crawling, and noindex controls indexing. Used for the right purpose, these tools let website owners exclude their site from Google search results and maintain control over their online presence.

Understanding Google’s Crawling and Indexing Process

Google’s crawling and indexing process is a complex system that allows the search engine to discover, crawl, and index new content on the web. Understanding how this process works is essential for website owners who want to exclude their site from Google search results. Google’s crawlers, also known as spiders, are software programs that continuously scan the web for new and updated content.

The crawling process begins with a list of URLs that Google’s algorithms have determined are likely to contain new or updated content. These URLs are then crawled by Google’s spiders, which follow hyperlinks from one page to another, discovering new content and updating existing content in the process. Once a page has been crawled, its content is indexed, which means it is added to Google’s massive database of web pages.
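The discovery step described above can be illustrated with a toy breadth-first crawler. This is only a sketch of the general idea, not Google’s actual system: the `TOY_WEB` dictionary, the `crawl` function, and the example.com URLs are all hypothetical, and the "web" is an in-memory mapping rather than real HTTP.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to (page text, outgoing links).
TOY_WEB = {
    "https://example.com/": ("Home page", ["https://example.com/a", "https://example.com/b"]),
    "https://example.com/a": ("Page A", ["https://example.com/b"]),
    "https://example.com/b": ("Page B", ["https://example.com/"]),
    "https://example.com/orphan": ("Never linked", []),
}


def crawl(seed_urls, web):
    """Breadth-first crawl: visit each discovered URL once and follow its links."""
    index = {}                   # URL -> stored page text (the toy "index")
    frontier = deque(seed_urls)  # URLs waiting to be crawled
    seen = set(seed_urls)        # URLs already queued, to avoid revisiting
    while frontier:
        url = frontier.popleft()
        if url not in web:
            continue  # the page does not exist in the toy web
        text, links = web[url]
        index[url] = text
        for link in links:
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return index


index = crawl(["https://example.com/"], TOY_WEB)
print(sorted(index))
```

The orphan page never enters the index because nothing links to it, which is why removing internal links to a page reduces, but does not guarantee against, its discovery.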

Website owners can influence the crawling and indexing process by optimizing their website’s structure and content. This includes using descriptive and keyword-rich titles, meta descriptions, and headings, as well as ensuring that the website’s architecture is clear and easy to navigate. By optimizing their website, owners can improve its visibility in Google search results and increase the chances of their content being crawled and indexed.

However, for website owners who want to exclude their site from Google search results, the same knowledge matters for the opposite reason. Crawling and indexing are separate steps, and each has its own control: robots.txt rules under the Robots Exclusion Protocol (REP) tell crawlers which URLs not to fetch, while a noindex meta tag tells Google not to list a page it has fetched. Nofollow, by contrast, only affects how the links on a page are treated. Choosing the right control helps limit unwanted search traffic.

It’s also important to note that Google’s crawling and indexing process is not a one-time event, but rather an ongoing process. Google’s spiders continuously scan the web for new and updated content, which means that website owners need to be proactive in maintaining their website’s visibility and controlling who accesses their site. By understanding how Google’s crawling and indexing process works, website owners can take the necessary steps to exclude their site from Google search results and maintain a clean online presence.

Learning how to exclude a website from Google search results therefore starts with understanding Google’s crawling and indexing process. By knowing how Google’s crawlers work and which signals they respect, website owners can take control of how their site appears in search.

Using the Google Search Console to Remove a Website

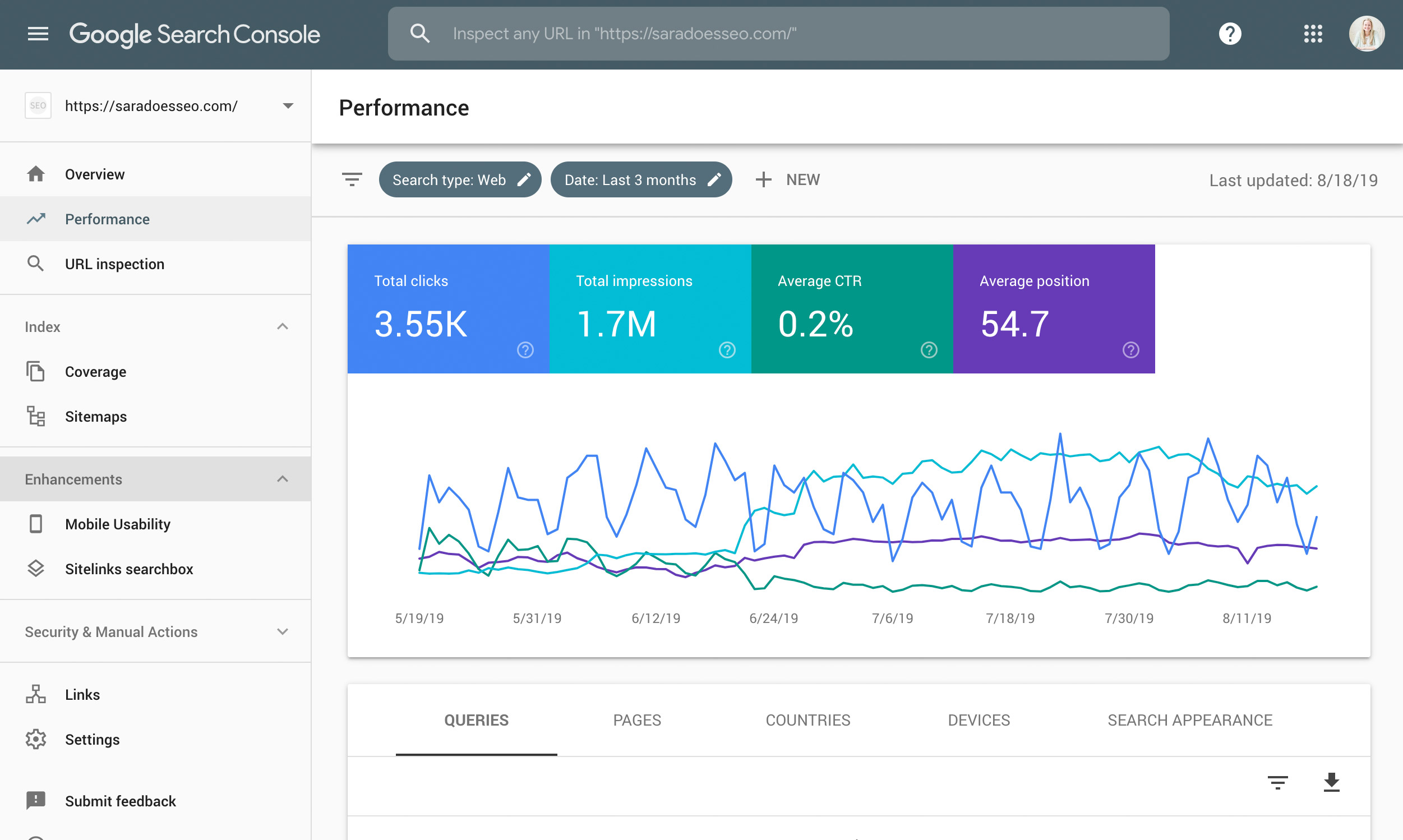

The Google Search Console is a powerful tool that allows website owners to manage their website’s presence in Google search results. One of the features of the Google Search Console is the ability to remove a website from Google search results. This can be useful for website owners who want to exclude their site from Google search results, either temporarily or permanently.

To remove a website from Google search results using the Google Search Console, website owners must first verify their site ownership. This can be done by adding a meta tag to the website’s homepage, uploading an HTML file to the website’s root directory, or by using the Google Analytics or Google Tag Manager verification methods.

Once site ownership has been verified, website owners can submit a removal request to Google. In the current Search Console this is done in the Removals tool (older versions labeled it “Remove URLs”): open a new request, enter the URL of the page, or a prefix that covers the whole site, and choose whether to temporarily remove the URL from results or only clear its cached snippet.

After the request is submitted, Google processes it and hides the matching URLs from its search results. Requests are usually handled quickly, often within about a day, and their status is shown in the same tool.

It’s worth noting that removing a website from Google search results using the Google Search Console is a temporary solution: the block lasts roughly six months, after which the page can reappear. If website owners want to permanently exclude their site from Google search results, they need a lasting signal as well, such as a noindex meta tag, password protection, or deleting the content so that it returns a 404 or 410 status. A robots.txt rule alone is not enough, because it stops crawling rather than indexing.

Learning how to exclude a website from Google search results using the Google Search Console is an important skill for website owners who want to maintain control over their online presence. By following the steps outlined above, website owners can quickly and easily remove their site from Google search results and prevent unwanted traffic.

In addition to using the Google Search Console, website owners can rely on robots.txt rules under the Robots Exclusion Protocol (REP) and on noindex and nofollow meta tags. These work best when each is used for its own purpose: a removal request for speed, and noindex for a lasting exclusion.

Utilizing the Robots Exclusion Protocol (REP)

The Robots Exclusion Protocol (REP) is a widely adopted standard that lets website owners tell web crawlers, such as Google’s crawlers, which pages or directories on their website should not be crawled. Blocking crawling keeps Google from reading those pages, which usually keeps their content out of Google search results.

To use the REP, website owners need to create a robots.txt file and place it in the root directory of their website. The file contains instructions about which paths each crawler may or may not fetch.

The REP is a simple and effective way to control crawling. However, it is not a foolproof way to exclude pages from search results: compliance is voluntary, and Google can still index a disallowed URL, without its content, if other pages link to it. To ensure that a page is completely excluded from Google search results, website owners should use a noindex meta tag and leave the page crawlable so that Google can see the tag.

Learning how to use the REP is an essential skill for website owners who want to maintain control over their online presence. It is particularly useful for keeping crawlers away from outdated or irrelevant sections of a site. It is not a security measure, though: the robots.txt file is publicly readable and does nothing to restrict who can open the listed pages.

When using the REP, it’s essential to follow best practices to ensure that the instructions in the robots.txt file are correctly interpreted by Google’s crawlers. This includes using the correct syntax and formatting, as well as specifying the correct pages or directories to exclude. By following best practices, website owners can ensure that their website is correctly crawled and indexed by Google, while also excluding specific pages or directories from Google search results.
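One way to check that a set of rules reads the way you intend is to parse it locally before publishing. The sketch below uses Python’s standard `urllib.robotparser`; the rules, the example.com URLs, and the `build_parser` helper are hypothetical. Python’s parser implements the basic protocol and does not replicate every detail of Google’s matching (wildcards, for instance), so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for Googlebot, one for every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""


def build_parser(robots_txt):
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Googlebot is blocked from /private/ but may fetch everything else.
print(parser.can_fetch("Googlebot", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/post.html"))       # True

# A crawler without its own group falls back to the "*" group.
print(parser.can_fetch("OtherBot", "https://example.com/tmp/file.txt"))          # False
```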

In addition to using the REP, website owners can also use noindex and nofollow meta tags and URL removal tools to exclude specific pages or directories from Google search results. Choosing the method that matches the goal, rather than stacking all of them on the same URL, gives the most predictable result.

Creating a robots.txt File to Block Google Crawlers

A robots.txt file is a text file placed in the root directory of a website to tell web crawlers, such as Google’s crawlers, which pages or directories on the website should not be crawled. By creating a robots.txt file, website owners can block Google crawlers from fetching specific pages or directories.

To create a robots.txt file, website owners need to use a plain-text editor, such as Notepad or TextEdit in plain-text mode, to create a new file. The file should be named “robots.txt” and saved in the root directory of the website, so that it is reachable at the top level of the domain. The file should contain instructions for web crawlers about which paths they may or may not fetch.

The syntax for a robots.txt file is simple and straightforward. Rules are grouped under a “User-agent” line that names the crawler they apply to, and the “Disallow” directive lists the paths that crawler should not fetch. For example, to block Google’s main crawler from accessing a specific page, website owners can add the following lines to their robots.txt file:

User-agent: Googlebot
Disallow: /path/to/page.html

Website owners can also use the “Allow” directive, typically to re-open a single path inside a directory that is otherwise disallowed. For example, to allow Google’s crawler to access a specific page, website owners can add the following lines to their robots.txt file:

User-agent: Googlebot
Allow: /path/to/page.html

It’s essential to note that the robots.txt file is not a foolproof method for keeping pages out of Google. A disallowed URL can still be indexed, without its content, if other sites link to it. To ensure that specific pages are completely excluded from Google search results, website owners should use a noindex meta tag instead and leave those pages crawlable so that Google can read the tag.

Learning how to create a robots.txt file is an essential skill for website owners who want to maintain control over their online presence. By creating one, website owners can keep Google’s crawlers away from specific pages or directories, which is particularly useful for outdated or irrelevant sections of a site. The file should not be relied on to protect sensitive information, because it only asks crawlers to stay away and does not restrict access.

In addition to creating a robots.txt file, website owners can use noindex and nofollow meta tags and URL removal tools. These methods complement each other only when applied with care: a URL that carries a noindex tag should not also be disallowed in robots.txt, or Google will never see the tag.
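Combining methods on the same URL can backfire, because Google has to crawl a page before it can read a noindex tag on it. The following sketch checks for that conflict with Python’s standard `urllib.robotparser`. The `noindex_is_visible` helper, the sample rules, and the example.com URL are hypothetical, and the check only approximates how Google evaluates robots.txt.

```python
from urllib.robotparser import RobotFileParser


def noindex_is_visible(robots_txt, page_url, user_agent="Googlebot"):
    """Return True if the crawler may fetch the page and so can read its noindex tag."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, page_url)


# Hypothetical rule sets: one blocks /drafts/, the other blocks nothing.
BLOCKING = "User-agent: *\nDisallow: /drafts/\n"
OPEN = "User-agent: *\nDisallow:\n"

page = "https://example.com/drafts/post.html"
print(noindex_is_visible(BLOCKING, page))  # False: the tag on this page would go unseen
print(noindex_is_visible(OPEN, page))      # True: the crawler can fetch the page and read the tag
```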

Noindex and Nofollow Meta Tags: What You Need to Know

Noindex and nofollow meta tags are two tools that website owners can use to control how Google indexes their pages and treats the links on them. Noindex keeps a page out of Google search results, while nofollow asks Google not to follow the links on a page.

The noindex meta tag is used to prevent Google from indexing a specific page on a website. This can be useful for website owners who want to keep outdated content out of Google’s index. To use the noindex meta tag, website owners simply need to add the following code to the head section of the page they want to exclude:

<meta name="robots" content="noindex">

The nofollow meta tag is used to ask Google not to follow the links on a specific page. This can be useful for website owners who do not want to be associated with the pages they link to. On its own it does not remove the page from search results. To use the nofollow meta tag, website owners simply need to add the following code to the head section of the page whose links should not be followed:

<meta name="robots" content="nofollow">

It’s essential to note that the noindex and nofollow meta tags have limits. Google only sees a meta tag when it crawls the page, so a page blocked in robots.txt can stay indexed despite carrying noindex, and a page may remain in results until its next crawl. Nofollow only affects links on the page that carries it, so the linked pages can still be discovered through links elsewhere. For faster removal, website owners can pair noindex with a request in a URL removal tool.

Learning how to use noindex and nofollow meta tags is an essential skill for website owners who want to maintain control over their online presence. They are particularly useful for removing outdated or irrelevant content from Google search results. Sensitive information needs access controls as well, since noindex hides a page from search without restricting who can open it.

In addition to using noindex and nofollow meta tags, website owners can use robots.txt rules under the Robots Exclusion Protocol (REP) and URL removal tools. A removal request pairs well with noindex, hiding the page at once while the tag takes effect. A robots.txt block on the same page does not, because it hides the tag from Google.

When using noindex and nofollow meta tags, it’s essential to follow best practices to ensure that the tags are correctly interpreted by Google’s crawlers. This includes using the correct syntax and formatting, as well as specifying the correct pages or links to exclude. By following best practices, website owners can ensure that their website is correctly crawled and indexed by Google, while also excluding specific pages or links from Google search results.
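A quick way to confirm that a page really carries the intended directives is to parse its HTML and look for the robots meta tag. The sketch below uses Python’s standard `html.parser`; the `RobotsMetaParser` class, the `robots_directives` helper, and the sample markup are hypothetical, and a real check would run against the HTML that the server actually returns.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # The content attribute holds a comma-separated list of directives.
            for token in attr_map.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


def robots_directives(html):
    """Return the set of robots directives found in the given HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


SAMPLE = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
found = robots_directives(SAMPLE)
print("noindex" in found, "nofollow" in found)  # True True
```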

Removing a Website from Google Search Results Using URL Removal Tools

URL removal tools are a powerful way to remove specific URLs from Google search results. These tools allow website owners to request the removal of individual URLs from Google’s index, which can be useful for removing outdated or irrelevant content, or for protecting sensitive information.

One of the most popular URL removal tools is Google’s own, the Removals tool in the Google Search Console. It lets website owners request that individual URLs, or every URL under a given prefix, be hidden from Google search results. To use the tool, website owners simply need to enter the URL they want to remove and choose the type of request.

Another URL removal tool is the Bing URL removal tool. This tool allows website owners to request the removal of individual URLs from Bing’s index, and can be accessed through the Bing Webmaster Tools. To use the tool, website owners simply need to enter the URL they want to remove, and provide a reason for the removal request.

It’s essential to note that URL removal tools are not a permanent fix. A removal request in Google’s tool hides a URL for roughly six months, and the page can return to the results afterward if it is still live and indexable. To ensure that specific URLs are completely removed from Google search results, website owners should also add a noindex meta tag, put the page behind a login, or delete it so that it returns a 404 or 410 status.

Learning how to use URL removal tools is an essential skill for website owners who want to maintain control over their online presence. By using these tools, website owners can remove specific URLs from Google search results, effectively excluding them from Google’s index. This can be particularly useful for website owners who want to protect sensitive information or remove outdated or irrelevant content from Google search results.

In addition to using URL removal tools, website owners can use robots.txt rules under the Robots Exclusion Protocol (REP) and noindex and nofollow meta tags. A common sequence is to submit the removal request for immediate effect and add noindex for the long term.

When using URL removal tools, it’s essential to submit requests carefully. This includes entering the URL exactly as it appears in search results, including the letter case of the path, and using the prefix option only when every URL under that prefix should disappear. By following these practices, website owners can exclude specific URLs from Google search results without accidentally hiding pages they want to keep visible.

Preventing Re-Crawling and Re-Indexing of Removed Content

Once you have removed content from Google’s index, it’s essential to take steps to prevent it from being re-crawled and re-indexed. This can be a challenging task, as Google’s crawlers are constantly scanning the web for new and updated content. However, by following a few best practices, you can help prevent removed content from being re-crawled and re-indexed.

One of the most effective ways to prevent re-crawling and re-indexing is to update your website’s architecture. This can include removing links to the removed content, updating your website’s navigation and internal linking structure, and ensuring that your website’s content is organized in a logical and consistent manner.

Canonical URLs are sometimes suggested for this purpose, but they solve a different problem. A canonical URL designates the preferred version among duplicate or near-duplicate pages and helps Google decide which version to show; it does not keep a removed page out of the index. For content that is gone for good, a more reliable signal is to have the old URL return a 404 or 410 status, or to keep a noindex tag on the page for as long as it stays online.

In addition to updating your website’s architecture, you can use the methods covered earlier to prevent re-crawling and re-indexing. Keep noindex meta tags in place on any removed pages that remain online, and avoid disallowing those same pages in robots.txt, since Google must be able to crawl a page to see its noindex tag. Used this way, these methods help ensure that removed content is not re-indexed by Google.
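For content that has been deleted outright, one widely used signal is to answer requests for the old URL with a 410 Gone (or 404 Not Found) status, which tells crawlers the page no longer exists. The sketch below shows the idea as a tiny WSGI application using only Python’s standard library. The `REMOVED_PATHS` set, the paths in it, and the `status_for` helper are hypothetical; on a real site this would normally be configured in the web server or CMS instead.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical set of paths that have been taken down for good.
REMOVED_PATHS = {"/old-promo.html", "/2019-event/"}


def app(environ, start_response):
    """Tiny WSGI app: removed paths answer 410 Gone, everything else 200 OK."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/plain; charset=utf-8")]
    if path in REMOVED_PATHS:
        start_response("410 Gone", headers)
        return [b"This page has been permanently removed.\n"]
    start_response("200 OK", headers)
    return [b"Hello\n"]


def status_for(path):
    """Call the app in-process (no network) and return the status line it sends."""
    environ = {}
    setup_testing_defaults(environ)  # fills in a minimal valid WSGI environ
    environ["PATH_INFO"] = path
    captured = []

    def start_response(status, response_headers):
        captured.append(status)

    app(environ, start_response)
    return captured[0]


print(status_for("/old-promo.html"))  # 410 Gone
print(status_for("/index.html"))      # 200 OK
```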

It’s also important to note that preventing re-crawling and re-indexing is an ongoing process. Google’s crawlers are constantly scanning the web for new and updated content, so it’s essential to regularly monitor your website’s content and make updates as needed. By following best practices and regularly monitoring your website’s content, you can help prevent removed content from being re-crawled and re-indexed by Google.

Learning how to prevent re-crawling and re-indexing is an essential skill for website owners who want to maintain control over their online presence. By following the tips and best practices outlined in this article, you can help ensure that removed content is not re-crawled and re-indexed by Google, and maintain a clean and up-to-date online presence.

In conclusion, preventing re-crawling and re-indexing is a critical step in maintaining control over your online presence. By updating your website’s architecture, keeping noindex tags or 404 and 410 responses in place for removed URLs, and monitoring your content regularly, you can help prevent removed content from being re-indexed by Google and ensure that your website is accurately represented in Google’s index.