Understanding the Need for Search Exclusions

In today’s digital age, online presence is crucial for individuals and businesses alike. However, with the vast amount of information available online, it’s not uncommon for sensitive or irrelevant content to appear in search results. This is where search exclusions come into play. Excluding certain content from Google search results can improve online privacy and search result relevance. But why is this important, and how can it be achieved?

For individuals, excluding personal data from Google search results can be a matter of online safety and security. With the rise of identity theft and cybercrime, it’s essential to protect sensitive information from prying eyes. By removing personal data from search results, individuals can reduce the risk of their information being misused.

For businesses, search exclusions can be a matter of reputation management. Irrelevant or outdated content can harm a company’s online reputation and deter potential customers. By excluding this content from search results, businesses can maintain a positive online presence and attract more customers.

So, how can you exclude something from Google search? The process may seem daunting, but with the right tools and knowledge, it’s achievable. In this article, we’ll explore the various methods for search exclusions, including using Google’s URL removal tool, robots.txt files, and meta tags. We’ll also discuss the importance of monitoring and updating your online presence to ensure that your search exclusions remain effective.

By understanding the need for search exclusions and taking control of your online presence, you can improve your online privacy and search result relevance. Whether you’re an individual or a business, it’s essential to protect your online reputation and maintain a positive presence. In the following sections, we’ll delve into the various methods for search exclusions and provide step-by-step instructions on how to achieve them.

How to Remove Personal Data from Google Search

Removing personal data from Google search results can be a daunting task, but it’s essential for maintaining online privacy and security. There are several methods to achieve this, including using Google’s URL removal tool and contacting website owners to request content removal.

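Google’s URL removal tool, the “Removals” report in Search Console, is the quickest way to hide a page on a site you own. It works only for sites you have verified in Search Console, and the removal is temporary (about six months), so the page itself still has to be deleted, edited, or marked “noindex” for the change to last. To use this tool, follow these steps:

1. Go to the Google Search Console, sign in to your account, and select the verified site.

2. Open the “Removals” report and click “New request”.

3. Enter the URL of the page containing your personal data and choose whether the request covers that URL only or every URL with that prefix.

4. Submit the request, then check its status in the same report.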

Alternatively, you can contact the website owner or webmaster to request content removal. This method is more time-consuming, but it’s effective for removing personal data from websites that are not under your control.

To contact the website owner, follow these steps:

1. Search for the website’s contact information, usually found in the footer or “About Us” page.

2. Send an email or message to the website owner, explaining the reason for removal and providing the URL of the page containing your personal data.

3. Be polite and professional in your request, and provide any necessary documentation or evidence to support your claim.

It’s essential to note that removing personal data from Google search results may take some time, and it’s not always possible to remove all instances of your data. However, by using these methods, you can significantly reduce the visibility of your personal data online.

In addition to removing personal data, it’s also important to prevent it from appearing in search results in the first place. This can be achieved by being mindful of the information you share online and using privacy settings to control who can access your data.

By taking control of your online presence and removing personal data from Google search results, you can maintain your online privacy and security. In the next section, we’ll discuss how to use robots.txt files to block Google crawlers and exclude content from search results.

Using Robots.txt to Block Google Crawlers

Robots.txt files are a core part of technical search engine optimization (SEO): they control which pages or directories Google’s crawler may access. Used carefully, they keep crawlers away from sensitive or irrelevant areas of your website, although on their own they do not guarantee that a URL stays out of search results.

A robots.txt file is a text file that is placed in the root directory of a website and contains instructions for search engine crawlers. The file tells crawlers which pages or directories to crawl and which to ignore. By using specific directives, you can block Google crawlers from accessing certain areas of your website.

Here’s an example of how to use robots.txt to block Google crawlers:

    User-agent: Googlebot
    Disallow: /private/
    Disallow: /admin/

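In this example, the robots.txt file instructs Googlebot not to crawl the /private/ and /admin/ directories. Blocking crawling is not the same as blocking indexing, however: Google can still list a blocked URL, without a description, if other pages link to it. To keep a page out of search results reliably, leave it crawlable and use the “noindex” tag covered in the next section, or put it behind a login.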
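The rules in the example can be checked locally before they are published. The sketch below uses Python’s standard `urllib.robotparser` module; the helper name `is_crawl_allowed` and the sample paths are illustrative assumptions, and the standard-library parser only approximates Googlebot’s own matching rules (it has no wildcard support, for instance).

```python
from urllib.robotparser import RobotFileParser

# The example rules from this section, one directive per line.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/
Disallow: /admin/
"""


def is_crawl_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if the given rules let `user_agent` crawl `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    # Hypothetical paths, used only to exercise the rules.
    for path in ("/private/report.html", "/admin/login", "/blog/post.html"):
        print(path, is_crawl_allowed(ROBOTS_TXT, "Googlebot", path))
```

Because the rules name only Googlebot, other crawlers are unaffected by them; a `User-agent: *` group would be needed to address every crawler.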

It’s essential to note that robots.txt files are not foolproof and can be circumvented by malicious crawlers. However, they are an effective way to communicate with legitimate crawlers like Googlebot and prevent unwanted indexing.

When using robots.txt files, it’s also important to consider the following best practices:

1. Use the correct syntax and formatting to ensure that your directives are recognized by Googlebot.

2. Be specific when blocking directories or pages to avoid accidentally blocking important content.

3. Regularly review and update your robots.txt file to ensure that it remains effective and accurate.
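One way to act on the second and third practices is a small regression check that runs whenever robots.txt changes. This is a minimal sketch, assuming you maintain your own lists of paths that must stay crawlable and paths that must stay blocked; the function name `audit_robots` and every path shown are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt, must_crawl, must_block, user_agent="Googlebot"):
    """Return a list of human-readable problems found in the rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for path in must_crawl:
        if not parser.can_fetch(user_agent, path):
            problems.append(f"blocked but should be crawlable: {path}")
    for path in must_block:
        if parser.can_fetch(user_agent, path):
            problems.append(f"crawlable but should be blocked: {path}")
    return problems


if __name__ == "__main__":
    # "Disallow: /p" is too broad: it matches /products/ as well as /private/.
    too_broad = "User-agent: *\nDisallow: /p\n"
    for problem in audit_robots(
        too_broad,
        must_crawl=["/products/shoes.html"],
        must_block=["/private/notes.html"],
    ):
        print(problem)
```

An empty result means the rules match your expectations for the listed paths only; it says nothing about paths you did not list.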

By using robots.txt files effectively, you can take control of your website’s crawlability and exclude sensitive or irrelevant content from Google search results. In the next section, we’ll discuss how to use meta tags to further refine your search engine exclusion strategy.

Meta Tags for Search Engine Exclusion

Meta tags give you page-level control over how search engines index your web pages. By adding a robots meta tag to a page, you can keep that page out of Google search results or tell crawlers not to follow its links.

There are two primary robots meta tag values used for search engine exclusion: “noindex” and “nofollow”. The “noindex” value tells search engines not to index a specific web page, while the “nofollow” value instructs search engines not to follow the links on a particular page.

To implement the “noindex” tag, add the following code to the <head> section of your web page:

    <meta name="robots" content="noindex">

This will instruct search engines not to index the page and exclude it from search results.

The “nofollow” tag is used to prevent search engines from following links on a particular page. This can be useful for preventing the spread of spam or low-quality content. To implement the “nofollow” tag, add the following code to the <head> section of your web page:

    <meta name="robots" content="nofollow">

It’s essential to note that while meta tags can be an effective way to exclude content from Google search results, they are not foolproof. A crawler has to fetch a page to read its meta tags, so a page that is also blocked in robots.txt can remain in the index, and a newly added tag takes effect only after the page is crawled again.
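To confirm which robots directives a page actually carries, you can parse its HTML and read the meta tag. The sketch below uses Python’s standard `html.parser` module; the class and function names are illustrative assumptions, and it looks only at meta tags in the HTML, not at HTTP response headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # The content attribute is a comma-separated list of directives.
            for part in attr_map.get("content", "").split(","):
                part = part.strip().lower()
                if part:
                    self.directives.add(part)


def robots_directives(html: str) -> set:
    """Return the set of robots directives declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(sorted(robots_directives(page)))
```

Running the check against the HTML your server really returns, rather than against a template, catches cases where a plugin or build step drops the tag.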

Best practices for using meta tags for search engine exclusion include:

1. Use the correct syntax and formatting to ensure that your meta tags are recognized by search engines.

2. Be specific when using meta tags to exclude content, as incorrect usage can lead to unintended consequences.

3. Regularly review and update your meta tags to ensure that they remain effective and accurate.

By using meta tags effectively, you can take control of your website’s crawlability and exclude sensitive or irrelevant content from Google search results. In the next section, we’ll discuss how to use Google Search Console to manage search result exclusions and monitor your website’s online presence.

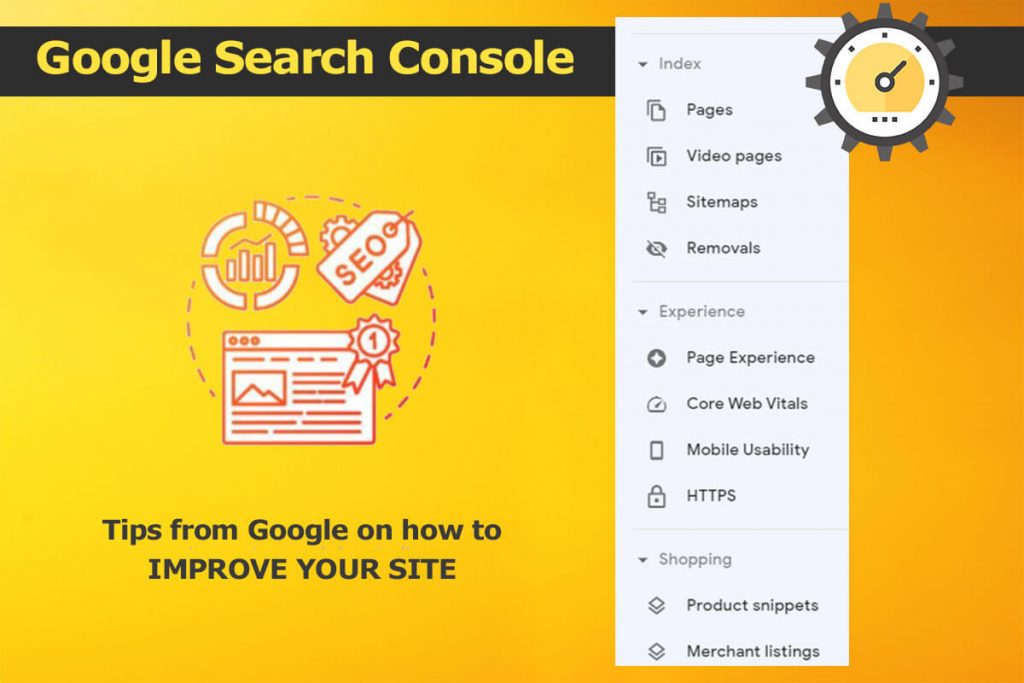

Google Search Console: A Powerful Tool for Search Exclusions

Google Search Console is a powerful tool that helps website owners manage their online presence and exclude sensitive content from Google search results. By using Search Console, you can monitor your website’s search result appearances, remove URLs, and request content removal.

To get started with Search Console, you’ll need to verify your website ownership and set up your account. Once you’ve done this, you can access a range of features and tools that can help you manage your website’s search result exclusions.

One of the most useful features of Search Console is the “Removals” tool (called “Remove URLs” in older versions of Search Console). This tool lets you ask Google to hide specific URLs on your verified website from search results for about six months, which gives you time to delete the content or add a “noindex” tag so that the exclusion becomes permanent.

To use the “Removals” tool, follow these steps:

1. Sign in to your Search Console account and select the website you want to manage.

2. Open the “Removals” report and click “New request”.

3. Enter the URL you want to remove and choose whether the request covers that URL only or every URL with that prefix.

4. Submit the request, then check its status in the same report.

Another useful feature of Search Console is the “Performance” report (formerly “Search Analytics”). This report provides insights into how your website is performing in search results, including the number of impressions, clicks, and average position.

By using Search Console, you can gain a better understanding of your website’s search result performance and make data-driven decisions to improve your online presence.

Best practices for using Search Console include:

1. Regularly monitor your website’s search result appearances and adjust your exclusion strategy as needed.

2. Use the “Removals” tool to request the temporary removal of sensitive or irrelevant content from Google’s search results.

3. Use the “Performance” report to gain insights into your website’s search result performance and make data-driven decisions.

By following these best practices and using Search Console effectively, you can take control of your website’s online presence and exclude sensitive content from Google search results.

Common Challenges and Solutions for Search Exclusions

When trying to exclude content from Google search results, several challenges may arise. In this section, we’ll discuss some common challenges and provide solutions and workarounds to help you overcome them.

One common challenge is duplicate content. If you have multiple versions of the same content on your website, it can be difficult to control which of them appears in search results. To solve this problem, you can use the “canonical” tag, a <link rel="canonical"> element in the page’s <head>, to specify which version of the content is the original and should be indexed by Google. Google treats the tag as a strong hint rather than a command.
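A quick way to audit duplicates is to read the canonical URL each version declares and confirm they all agree. This is a minimal sketch using Python’s standard `html.parser` module; the names `CanonicalParser` and `find_canonical` and the example URLs are assumptions made for illustration.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = {name: (value or "") for name, value in attrs}
        # rel can hold several space-separated values.
        if "canonical" in attr_map.get("rel", "").lower().split():
            self.canonical = attr_map.get("href") or None


def find_canonical(html: str):
    """Return the canonical URL declared in the page, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


if __name__ == "__main__":
    # Two hypothetical versions of the same product page.
    pages = {
        "/shoes?color=red": '<head><link rel="canonical" href="https://example.com/shoes"></head>',
        "/shoes": '<head><link rel="canonical" href="https://example.com/shoes"></head>',
    }
    declared = {find_canonical(html) for html in pages.values()}
    print("consistent" if len(declared) == 1 else "conflicting", declared)
```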

Another challenge is incorrect URL removal. If you remove a URL from Google’s index, but the URL is still live and linked to from other pages on your website, it is likely to be re-indexed. To solve this problem, fix the page itself: delete it so that it returns a 404 or 410 status, or add a “noindex” tag, and update the internal links that still point to it. Adding “nofollow” to those links does not by itself keep the URL out of the index.

Another common challenge is the presence of sensitive information in search results. If you have sensitive information on your website, such as personal data or confidential business information, it’s essential to exclude it from search results. To solve this problem, you can use the “noindex” tag to prevent Google from indexing the sensitive information. Keep in mind that “noindex” hides a page from search results but does not restrict access to it, so confidential material should also sit behind a login.

Finally, another challenge is the difficulty of removing content from search results that is hosted on third-party websites. If you have content hosted on a third-party website, such as a social media platform or a content sharing site, it can be difficult to remove it from search results. To solve this problem, you can contact the website owner or administrator and request that they remove the content.

By understanding these common challenges and using the solutions and workarounds provided, you can effectively exclude content from Google search results and maintain control over your online presence.

Some additional tips for overcoming common challenges include:

1. Regularly monitor your website’s search result appearances and adjust your exclusion strategy as needed.

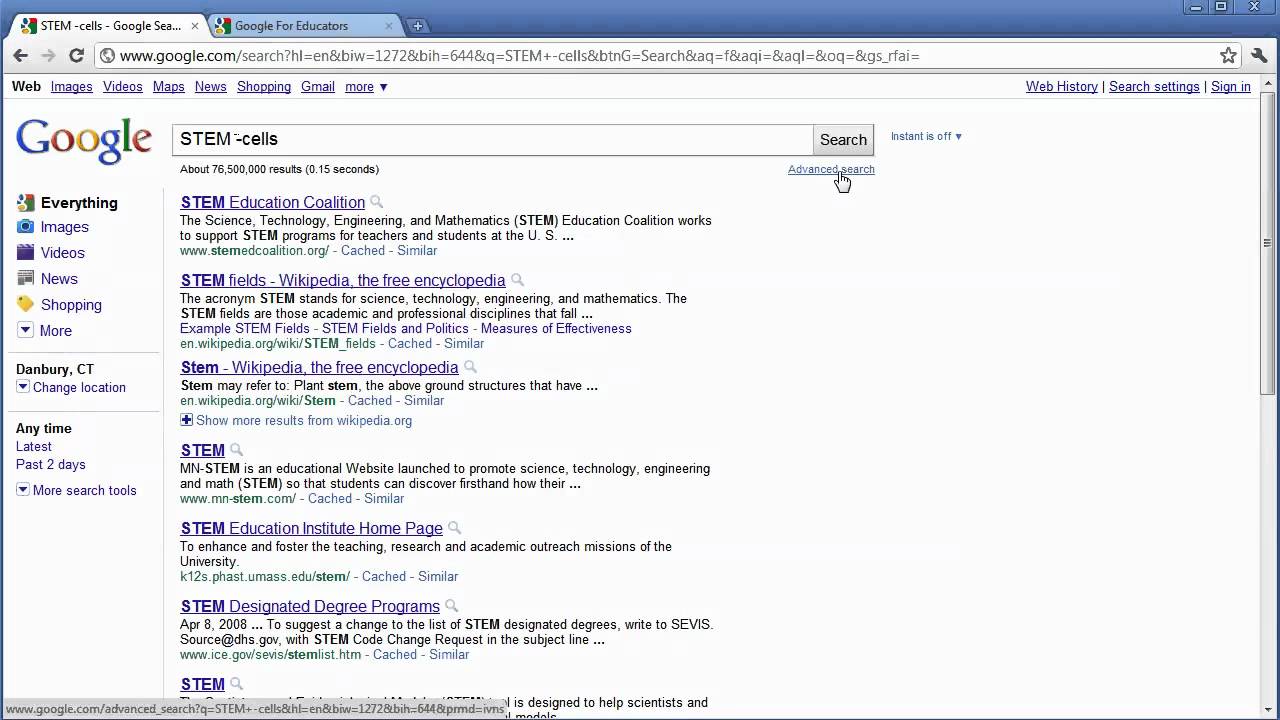

2. Use the “site:” operator to search for specific content on your website and identify potential exclusion targets.

3. Use the URL Inspection tool in Search Console to check how Google sees a specific page on your website. The “info:” operator that once served this purpose has been retired by Google.
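The “site:” checks from the list above are easy to script when you have several terms to look for. The sketch below only builds the search URLs, using Python’s standard `urllib.parse` module; the function name `site_search_url`, the domain, and the search terms are placeholders.

```python
from urllib.parse import urlencode


def site_search_url(domain: str, terms: str = "") -> str:
    """Build a Google search URL restricted to one site with the site: operator."""
    query = f"site:{domain} {terms}".strip()
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    # Hypothetical terms that should not appear in public results.
    for term in ("invoice", "staging", "internal use only"):
        print(site_search_url("example.com", term))
```

Open each URL in a browser and review the results by hand; anything that should not be public becomes a candidate for “noindex” or removal.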

By following these tips and using the solutions and workarounds provided, you can effectively exclude content from Google search results and maintain control over your online presence.

Best Practices for Search Exclusion and Online Privacy

Maintaining online privacy and excluding sensitive content from Google search results is crucial in today’s digital age. By following best practices, you can ensure that your online presence is protected and that your sensitive information is not accessible to the public.

One of the most important best practices is to regularly monitor your website’s search result appearances. This can be done using Google Search Console, which provides insights into how your website is performing in search results. By monitoring your search result appearances, you can identify potential issues and take corrective action to exclude sensitive content from Google search results.

Another best practice is to use the “noindex” meta tag to keep specific web pages out of Google search results, adding “nofollow” where you also want crawlers to ignore the links on a page. The “noindex” tag can be used to prevent Google from indexing sensitive information, such as personal data or confidential business information.

It’s also essential to use the “robots.txt” file to control Google crawler access to specific web pages or directories. This file can be used to prevent Google from crawling sensitive information, such as personal data or confidential business information. Do not rely on both methods for the same page, though: a crawler that is blocked from fetching a page never sees its “noindex” tag.
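A “noindex” tag has no effect on a page that robots.txt stops the crawler from fetching, and that conflict can be detected automatically. The sketch below combines Python’s standard `urllib.robotparser` and `html.parser` modules; the names `NoindexFinder` and `noindex_is_hidden` are illustrative assumptions, and the robots.txt matching only approximates Googlebot’s behavior.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class NoindexFinder(HTMLParser):
    """Sets `found` when a robots meta tag contains the noindex directive."""

    def __init__(self):
        super().__init__()
        self.found = False

    def handle_starttag(self, tag, attrs):
        attr_map = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr_map.get("name", "").lower() == "robots":
            values = [v.strip().lower() for v in attr_map.get("content", "").split(",")]
            if "noindex" in values:
                self.found = True


def noindex_is_hidden(robots_txt, path, html, user_agent="Googlebot"):
    """Return True when the page declares noindex but the crawler cannot fetch it."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    finder = NoindexFinder()
    finder.feed(html)
    return finder.found and not rules.can_fetch(user_agent, path)


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /private/\n"
    page = '<head><meta name="robots" content="noindex"></head>'
    print(noindex_is_hidden(robots, "/private/profile.html", page))
```

When the function returns True, either remove the Disallow rule so the tag can be read, or drop the tag and protect the page another way.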

In addition to these best practices, it’s also important to regularly update your website’s content and ensure that it is accurate and relevant. This can help to improve your website’s search engine ranking and ensure that your online presence is protected.

Finally, it’s essential to be aware of the potential risks associated with online privacy and search exclusion. By being aware of these risks, you can take steps to mitigate them and ensure that your online presence is protected.

Some additional tips for maintaining online privacy and excluding sensitive content from Google search results include:

1. Use strong passwords and keep them confidential to prevent unauthorized access to your website.

2. Use encryption to protect sensitive information, such as personal data or confidential business information.

3. Regularly back up your website’s data to prevent loss in case of a security breach.

4. Use a reputable web hosting service that provides robust security measures to protect your website.

By following these best practices and tips, you can ensure that your online presence is protected and that your sensitive information is not accessible to the public.

Conclusion: Taking Control of Your Online Presence

In conclusion, excluding certain content from Google search results is crucial for maintaining online privacy and search result relevance. By understanding the importance of search exclusions and using the tools and techniques outlined in this article, you can take control of your online presence and ensure that your sensitive information is not accessible to the public.

Remember, search exclusions are not a one-time task, but rather an ongoing process that requires regular monitoring and updates. By staying vigilant and proactive, you can protect your online presence and maintain a positive reputation.

So, what are you waiting for? Take control of your online presence today and start excluding sensitive content from Google search results. Use the tools and techniques outlined in this article to get started, and don’t hesitate to reach out if you have any questions or need further assistance.

By taking control of your online presence, you can:

1. Protect your sensitive information from being accessed by the public.

2. Improve your search result relevance and online reputation.

3. Increase your online security and reduce the risk of cyber threats.

4. Enhance your overall online experience and maintain a positive online presence.

Don’t wait any longer to take control of your online presence. Start excluding sensitive content from Google search results today and protect your online reputation.