Why Your Pages Aren’t Getting Indexed: Common Pitfalls to Avoid

Creating high-quality content is a crucial step in any online marketing strategy, but website owners often find that their pages aren’t getting indexed by Google. This is frustrating, especially when you’ve invested time and effort into crafting engaging and informative content. There are several common reasons why pages fail to get indexed, and understanding them is the first step toward improving your online visibility.

Technical issues are one of the primary reasons pages don’t get indexed. Google’s systems crawl and index pages that meet specific technical requirements, and if your website doesn’t meet them, your pages may be skipped. Common technical issues include crawl errors, duplicate content, and mobile usability problems. These issues can be resolved by conducting regular technical SEO audits and implementing the fixes they surface.

Poor content quality is another reason pages may not get indexed. Google’s algorithms assess content quality based on various factors, including relevance, authority, and user engagement. If your content doesn’t meet these standards, it may not get indexed. To improve content quality, focus on creating engaging and informative content that resonates with your target audience. Conduct thorough keyword research to ensure that your content is relevant to your audience’s needs, and use high-quality visuals and multimedia elements to enhance user engagement.

In addition to technical issues and poor content quality, there may be new reasons why your pages aren’t getting indexed. For instance, Google’s indexing algorithm may be prioritizing pages that meet specific criteria, such as page authority, content freshness, and user behavior. Understanding these criteria can help you optimize your website and content to improve indexing. By staying up-to-date with the latest developments in Google’s indexing algorithm, you can adapt your SEO strategies to ensure continued indexing success.

Furthermore, website structure and internal linking can also impact indexing. A well-organized website structure can help Google’s algorithms crawl and index pages more efficiently, while internal linking can help to distribute link equity and improve page authority. By optimizing your website’s structure and internal linking, you can improve indexing and enhance your online visibility.

In conclusion, there are several reasons why your pages may not be getting indexed by Google. By understanding these reasons and implementing fixes to technical issues, improving content quality, and optimizing website structure and internal linking, you can improve indexing and enhance your online visibility. Stay ahead of the curve by monitoring changes in Google’s indexing algorithm and adapting your SEO strategies to ensure continued indexing success.

How to Identify and Fix Technical Issues Preventing Indexing

Technical SEO plays a crucial role in getting pages indexed by Google. However, technical issues can prevent pages from being indexed, leading to a significant decrease in online visibility. Identifying and fixing these technical issues is essential to ensure that your pages are crawled and indexed correctly.

One of the most common technical issues that can prevent indexing is crawl errors. Crawl errors occur when Google’s algorithms are unable to crawl a page due to issues such as broken links, server errors, or DNS problems. To identify crawl errors, use Google Search Console to monitor your website’s crawl data. Fixing crawl errors can be as simple as updating a broken link or resolving a server issue.
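
As a rough illustration of triaging the crawl problems described above, the sketch below sorts HTTP responses into broad buckets. The bucket names and the `check_url` helper are assumptions made for this example, not anything Google Search Console exposes, and the script is only a quick spot check that complements Search Console’s own reports.

```python
import urllib.error
import urllib.request


def classify_status(code: int) -> str:
    """Map an HTTP status code to a rough crawl-health bucket (names are illustrative)."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if code in (404, 410):
        return "broken link"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"


def check_url(url: str, timeout: float = 10.0) -> str:
    """Request a URL and report which bucket the response falls into."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return classify_status(response.status)
    except urllib.error.HTTPError as err:
        # urllib raises 4xx and 5xx responses as HTTPError; the code is still available.
        return classify_status(err.code)
    except OSError:
        # DNS failures, refused connections, and timeouts end up here.
        return "unreachable"
```

Calling `check_url` on each URL in your sitemap gives a first pass at finding broken links and server errors. Note that `urlopen` follows redirects automatically, and that some servers reject `HEAD` requests, so a `GET` fallback may be needed in practice.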

Duplicate content is another technical issue that can interfere with indexing. Duplicate content occurs when multiple pages on your website have the same or very similar content, in which case Google typically indexes only the version it considers canonical. To control which version that is, specify the preferred URL with a “rel=canonical” link element in the page’s head section.
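
To check what a page actually declares as its canonical URL, a small parser is enough. The following is a minimal sketch using Python’s standard `html.parser`; the class and function names are made up for this example, and the rule of returning nothing when several canonicals are declared is an assumption chosen so that conflicting declarations stand out.

```python
from html.parser import HTMLParser
from typing import List, Optional


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before matching.
        rel_values = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL if exactly one is declared, else None."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if len(finder.canonicals) == 1:
        return finder.canonicals[0]
    return None


page = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'
print(find_canonical(page))  # prints https://example.com/shoes
```

A result of `None` means the page either declares no canonical or declares more than one, and both cases are worth a manual look.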

Mobile usability problems can also hurt indexing. With the majority of users accessing websites through mobile devices, and with Google crawling primarily with its smartphone crawler, it’s essential to ensure that your website is mobile-friendly. Google retired its standalone Mobile-Friendly Test tool in late 2023, so use Lighthouse in Chrome DevTools, or a comparable audit tool, to identify mobile usability issues and make the necessary changes.

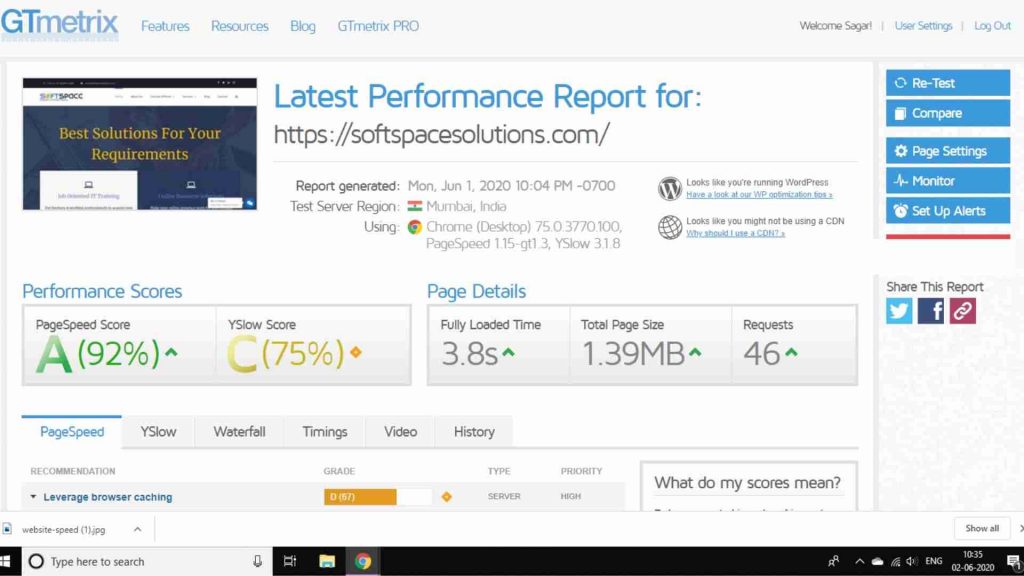

In addition to crawl errors, duplicate content, and mobile usability problems, other technical issues can prevent indexing. These include slow page speed, invalid or expired SSL certificates, and missing or outdated XML sitemaps. To identify and fix these issues, use tools such as Google PageSpeed Insights, SSL Labs, and XML sitemap generators.
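
If you would rather not depend on a third-party generator, a basic XML sitemap can be produced with Python’s standard library. This is a minimal sketch: the `build_sitemap` name and the `(url, last_modified)` input shape are assumptions for the example, and it emits only the `loc` and `lastmod` elements of the sitemaps.org format.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, str]]) -> str:
    """Build a sitemap string from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod uses the W3C date format, for example 2024-01-15.
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([("https://example.com/", "2024-01-15")]))
```

Save the output as `sitemap.xml` at the site root and submit it through Google Search Console so that new and updated URLs are easier to discover.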

By identifying and fixing technical issues, you can improve your website’s crawlability and indexing. This can lead to increased online visibility, improved search engine rankings, and more traffic to your website. Remember to regularly monitor your website’s technical SEO to ensure that you’re not missing out on any opportunities to improve your online presence.

Furthermore, staying up-to-date with the latest technical SEO best practices can help you avoid common pitfalls that can prevent indexing. This includes staying informed about the latest Google algorithm updates, using the latest technical SEO tools, and attending industry conferences and workshops.

By prioritizing technical SEO and staying ahead of the curve, you can ensure that your pages are indexed correctly and that your online presence is optimized for success.

The Impact of Content Quality on Indexing: What You Need to Know

Content quality plays a significant role in getting pages indexed by Google. The search engine’s algorithms assess content quality based on various factors, including relevance, authority, and user engagement. To improve content quality, it’s essential to understand how Google’s algorithms evaluate content. This section explains those signals and offers guidance on creating high-quality, engaging content that resonates with users.

Google’s algorithms use various signals to assess content quality, including the content’s relevance to the user’s search query, the authority of the content, and the user’s engagement with the content. To create high-quality content, focus on providing value to the user, rather than trying to manipulate the algorithms. This can be achieved by conducting thorough keyword research, creating engaging and informative content, and using high-quality visuals and multimedia elements.

One of the key factors that Google’s algorithms use to assess content quality is relevance. To ensure that your content is relevant to the user’s search query, use keywords strategically throughout the content, including in the title, meta description, and headings. However, avoid keyword stuffing, as this can lead to penalties and negatively impact content quality.
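
One rough way to spot possible keyword stuffing is to measure how much of a page’s text a single phrase takes up. The sketch below is only a heuristic: `keyword_density` is a hypothetical helper, Google publishes no density threshold, and any cutoff you apply to the result is your own assumption.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Return the share of words in `text` taken up by occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not target:
        return 0.0
    span = len(target)
    # Slide a window of the phrase length across the text and count exact matches.
    hits = sum(
        1
        for start in range(len(words) - span + 1)
        if words[start:start + span] == target
    )
    return hits * span / len(words)


print(keyword_density("red shoes and blue shoes", "shoes"))  # prints 0.4
```

An unusually high value compared with the rest of your pages is a prompt to reread the copy, not proof of a problem.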

Authority is another critical factor that Google’s algorithms use to assess content quality. To establish authority, create high-quality, engaging content that provides value to the user. This can be achieved by using expert opinions, citing credible sources, and providing unique insights and perspectives. Additionally, use internal and external linking to establish relationships between content and demonstrate expertise.

User engagement is also an essential factor that Google’s algorithms use to assess content quality. To improve user engagement, focus on creating content that resonates with users, including content that is informative, entertaining, and engaging. Use high-quality visuals and multimedia elements, such as images, videos, and infographics, to enhance user engagement and provide a better user experience.

Furthermore, content freshness is also an important factor that Google’s algorithms use to assess content quality. To ensure that your content is fresh and up-to-date, regularly update and refresh content, including blog posts, articles, and other types of content. This can help to improve content quality and increase the chances of getting pages indexed.

By understanding how Google’s algorithms assess content quality and applying the guidance above, you can improve your content and increase the chances of getting pages indexed. Remember to focus on providing value to the user rather than trying to manipulate the algorithms, and treat relevance, authority, and user engagement as the signals to build toward.

Understanding Google’s Indexing Priorities: A Deep Dive

Google’s indexing algorithm is a complex system that determines which pages to index and when. While the exact inner workings of the algorithm are not publicly disclosed, there are certain factors that are known to influence indexing priorities. Understanding these factors is crucial for webmasters and SEOs who want to ensure their pages are indexed correctly.

One of the primary factors that affects indexing priorities is page authority. Pages with high authority, which Google estimates from link-based signals such as PageRank, are more likely to be indexed quickly and crawled frequently. Third-party metrics such as Domain Authority attempt to approximate this, but Google does not use them. Pages with strong link signals are treated as more trustworthy and relevant to users.

Content freshness is another important factor in indexing priorities. Google favors pages with fresh, up-to-date content over those with stale or outdated information. This is why it’s essential to regularly update and refresh your content to signal to Google that your page is active and worthy of indexing.

User behavior also plays a significant role in indexing priorities. Google takes into account how users interact with your page, including metrics such as click-through rate, bounce rate, and time on page. Pages that engage users and provide a positive experience are more likely to be indexed and ranked highly.

In addition to these factors, Google also considers the overall quality and relevance of your content. Pages that are well-written, informative, and relevant to the user’s search query are more likely to be indexed and ranked highly. On the other hand, pages with low-quality or irrelevant content may be ignored or penalized by Google’s algorithm.

Another factor is the growing importance of entities. Google increasingly interprets pages in terms of entities, such as people, places, and things, rather than keywords alone. Pages that fail to provide comprehensive and accurate information about the entities they cover may struggle to get indexed.

Finally, it’s worth noting that how visible an indexed page is can vary depending on the specific search query and user intent. For example, pages that are optimized for long-tail keywords are more likely to surface for those specific queries, while pages that are optimized for broad keywords are more likely to surface for more general queries.

By understanding these factors and how they influence indexing priorities, webmasters and SEOs can optimize their pages to improve their chances of getting indexed by Google. Whether it’s through improving page authority, content freshness, or user engagement, there are many ways to signal to Google that your page is worthy of indexing.

How to Optimize Your Website’s Structure for Better Indexing

A well-structured website is essential for getting your pages indexed by Google. A clear and organized site architecture helps search engines understand the hierarchy and relevance of your content, making it more likely to be indexed and ranked highly. In this section, we’ll explore the key elements of website structure that impact indexing and provide tips on how to optimize your site for better indexing.

Information architecture is the foundation of a well-structured website. It refers to the way you organize and categorize your content to make it easily accessible to users and search engines. A good information architecture should be intuitive, logical, and consistent throughout your site. This can be achieved by using a clear and concise navigation menu, breadcrumb trails, and a consistent URL structure.

URL structure is another critical aspect of website structure that affects indexing. Google recommends using descriptive and concise URLs that accurately reflect the content of the page. This helps search engines understand the relevance and context of your content, making it more likely to be indexed. Avoid using generic or duplicate URLs, and instead opt for descriptive URLs that include target keywords.
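
Descriptive URLs are easy to generate consistently from page titles. The following sketch shows one possible approach; the `slugify` helper and its six-word cap are assumptions chosen for illustration, not a Google requirement.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters, then drop anything outside ASCII.
    normalized = unicodedata.normalize("NFKD", title)
    ascii_text = normalized.encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words[:max_words])


print(slugify("10 Tips for Faster Page Speed"))  # prints 10-tips-for-faster-page-speed
```

Generating slugs in one place keeps the URL structure consistent across the site, which is harder to guarantee when URLs are typed by hand.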

Internal linking is also essential for website structure and indexing. Internal linking helps search engines understand the relationships between different pages on your site and can improve the visibility of your content. Use descriptive anchor text for your internal links, and avoid over-linking or using generic anchor text like “click here.”
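
Generic anchor text is simple to detect automatically. The sketch below scans a page’s HTML and lists links whose text is empty or appears in a small list of generic phrases. The `GENERIC_ANCHORS` list is an assumption made for this example and should be extended to match your own site.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

# Illustrative list of phrases that say nothing about the link target.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this page"}


class AnchorAuditor(HTMLParser):
    """Record (href, anchor text) for every link in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        # Only collect text while inside an open <a> element.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def generic_anchor_links(html: str) -> List[Tuple[str, str]]:
    """Return links whose anchor text is empty or on the generic list."""
    auditor = AnchorAuditor()
    auditor.feed(html)
    auditor.close()
    return [
        (href, text)
        for href, text in auditor.links
        if not text or text.lower() in GENERIC_ANCHORS
    ]


sample = '<p><a href="/pricing">Click here</a> or read <a href="/guides/crawl-errors">our guide to crawl errors</a>.</p>'
print(generic_anchor_links(sample))  # prints [('/pricing', 'Click here')]
```

Links that wrap only an image are reported with empty text, which is a reminder to check that the image has a descriptive alt attribute.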

In addition to these structural elements, it’s also important to consider the overall user experience of your website. A website with a poor user experience can negatively impact indexing, as search engines take into account metrics like bounce rate and time on page. Ensure that your website is mobile-friendly, has fast loading speeds, and provides a clear and concise user interface.

Site structure also supports the way Google interprets entities, such as people, places, and things. A well-structured website that provides clear and concise information about the entities it covers is easier for Google to understand, which can improve indexing and ranking.

By optimizing your website’s structure for better indexing, you can improve the visibility and ranking of your content. Remember to focus on creating a clear and organized site architecture, using descriptive URLs, and internal linking to help search engines understand the relevance and context of your content. By following these tips, you can improve your website’s indexing and drive more traffic to your site.

Furthermore, it’s essential to regularly review and update your website’s structure to ensure it remains optimized for indexing. This can involve conducting regular technical SEO audits, monitoring website analytics, and making adjustments to your site architecture as needed. By staying on top of website structure and indexing, you can ensure your content remains visible and accessible to search engines and users alike.

The Role of Links in Getting Your Pages Indexed: A Comprehensive Guide

Links play a crucial role in getting pages indexed by Google. While high-quality content is essential, links help search engines understand the relevance and authority of a webpage. In this section, we will delve into the importance of links in indexing and provide guidance on how to build high-quality links that can improve indexing.

Internal linking is a critical aspect of link building. It helps search engines understand the structure of a website and the relationships between different pages. By linking to relevant and useful content within a website, webmasters can help Google’s crawlers navigate and index pages more efficiently. When building internal links, it’s essential to use descriptive anchor text that accurately reflects the content of the linked page.

External linking is also vital for indexing. When a webpage links to high-quality, relevant content on other websites, it helps search engines understand the context and relevance of the page. This can improve the page’s authority and increase the chances of getting indexed. However, it’s essential to avoid over-linking, as this can lead to penalties and decreased indexing.

Link equity is another critical factor in indexing. Link equity refers to the value that a link passes from one webpage to another. When a high-authority webpage links to a lower-authority webpage, it passes link equity, which can improve the lower-authority page’s indexing. Building high-quality links from authoritative sources can significantly improve a webpage’s indexing.

So, how can webmasters build high-quality links that improve indexing? Here are some tips:

- Create high-quality, engaging content that attracts links from other websites.

- Participate in guest blogging and content collaborations to build relationships with other webmasters and earn links.

- Use resource pages to link to high-quality content on other websites and attract links in return.

- Avoid link schemes and manipulative tactics, as these can lead to penalties and decreased indexing.

By understanding the role of links in indexing and building high-quality links, webmasters can improve their webpage’s visibility and increase the chances of getting indexed by Google. Remember, links are just one aspect of a comprehensive SEO strategy. By combining high-quality content, technical optimization, and link building, webmasters can unlock the secrets to getting their pages indexed and improve their online visibility.

Common Content Types That Are Difficult to Get Indexed: Solutions and Workarounds

While Google’s indexing algorithm has become increasingly sophisticated, there are still certain content types that can be challenging to get indexed. In this section, we will explore common content types that are difficult to get indexed, and provide solutions and workarounds to help improve their visibility.

Videos are one of the most common content types that can be difficult to get indexed. This is because Google’s crawlers have limited ability to crawl and index video content. However, there are several workarounds that can help improve video indexing. One solution is to provide a transcript of the video content, which can be crawled and indexed by Google. Additionally, using schema markup can help Google understand the context and content of the video.
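
Schema markup for a video is usually added as a JSON-LD block in the page’s HTML. The sketch below builds one with the schema.org `VideoObject` type. The `video_json_ld` helper name is an assumption, and the example covers only a handful of commonly used properties, so check Google’s current video structured data guidelines for what it requires.

```python
import json


def video_json_ld(name, description, thumbnail_url, upload_date, content_url):
    """Return a <script> tag holding schema.org VideoObject markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,
        "contentUrl": content_url,
    }
    # Escape "</" so a value can never close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(video_json_ld(
    "How to Fix Crawl Errors",
    "A walkthrough of common crawl errors and their fixes.",
    "https://example.com/thumbs/crawl-errors.jpg",
    "2024-01-15",
    "https://example.com/videos/crawl-errors.mp4",
))
```

Place the resulting tag on the page that hosts the video, alongside the transcript, so that the markup and the crawlable text describe the same content.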

Images are another content type that can be challenging to get indexed. While Google’s image search is highly advanced, images that are not properly optimized can still be overlooked. To improve image indexing, use descriptive alt text and file names that accurately reflect the content of the image. Additionally, using schema markup can help Google understand the context and content of the image.
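
Missing image descriptions are easy to find with a short script. The following sketch lists the `src` of every `img` element that has no `alt` attribute or an empty one. The helper names are made up for this example, and an empty `alt` is legitimate for purely decorative images, so treat the output as a review list, not a list of errors.

```python
from html.parser import HTMLParser
from typing import List


class AltTextAuditor(HTMLParser):
    """Collect the src of images whose alt attribute is missing or empty."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src") or "")


def images_missing_alt(html: str) -> List[str]:
    """Return the src values of images that need a description."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing_alt


sample = '<img src="red-running-shoes.jpg" alt="Red running shoes"><img src="IMG_0042.jpg">'
print(images_missing_alt(sample))  # prints ['IMG_0042.jpg']
```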

PDFs are also a common content type that can be difficult to get indexed. Google can generally crawl and index text-based PDFs, but PDFs give you less control over titles, links, and markup than HTML pages do. One solution is to convert important PDFs to HTML, which is easier to crawl, link to, and mark up. Because schema markup cannot be embedded in a PDF itself, add it to the HTML page that hosts or links to the file.

Other content types that can be challenging to get indexed include audio files, podcasts, and interactive content. However, by using creative workarounds and optimization techniques, it’s possible to improve the indexing of these content types. For example, providing a transcript of audio content or using schema markup can help Google understand the context and content of the audio file.

So, what can webmasters do to improve the indexing of difficult content types? Here are some tips:

- Use descriptive and accurate metadata, such as alt text and file names, to help Google understand the context and content of the page.

- Provide transcripts or summaries of video and audio content to help Google crawl and index the content.

- Use schema markup to help Google understand the context and content of the page.

- Optimize images and videos by using descriptive file names, and give every meaningful image descriptive alt text.

- Consider converting PDFs to HTML to improve indexing.

By using these workarounds and optimization techniques, webmasters can improve the indexing of difficult content types and increase their online visibility. Remember, indexing is just one aspect of a comprehensive SEO strategy. By combining high-quality content, technical optimization, and link building, webmasters can unlock the secrets to getting their pages indexed and improve their online success.

Staying Ahead of the Curve: How to Monitor and Adapt to Changes in Google’s Indexing Algorithm

Google’s indexing algorithm is constantly evolving, and it’s essential to stay up-to-date with the latest changes to ensure continued indexing success. In this section, we will explore the importance of monitoring algorithm updates and adapting SEO strategies to stay ahead of the curve.

Google’s algorithm updates can have a significant impact on indexing, and it’s crucial to monitor these changes to avoid being caught off guard. One way to stay informed is to follow Google’s official blog and social media channels, where they often announce updates and provide guidance on how to adapt. Additionally, using tools such as Google Search Console and Google Analytics can help webmasters stay on top of algorithm changes and identify potential issues.

Another way to stay ahead of the curve is to participate in online communities and forums, where SEO experts and webmasters share their experiences and insights on the latest algorithm updates. This can be a valuable resource for staying informed and learning from others who have already adapted to the changes.

So, how can webmasters adapt their SEO strategies to stay ahead of the curve? Here are some tips:

- Stay informed about the latest algorithm updates and changes.

- Use tools such as Google Search Console and Google Analytics to monitor website performance and identify potential issues.

- Participate in online communities and forums to stay informed and learn from others.

- Be prepared to adapt SEO strategies quickly in response to algorithm changes.

- Focus on creating high-quality, engaging content that resonates with users.

By staying informed and adapting to changes in Google’s indexing algorithm, webmasters can ensure continued indexing success and stay ahead of the competition.

One recent shift that affects indexing is the increasing importance of user experience and mobile-friendliness. With the majority of users accessing the web through mobile devices, Google is prioritizing websites that provide a seamless and intuitive user experience. To adapt to this change, webmasters should focus on creating mobile-friendly websites with fast loading speeds and easy navigation.

Another recent shift is the growing importance of entities. Google increasingly relies on entities to understand the context and relevance of web pages. To adapt to this change, webmasters should focus on creating content that clearly identifies and describes the entities it covers, such as people, places, and things.

By staying informed and adapting to these changes, webmasters can ensure continued indexing success and stay ahead of the curve.